Semantic Mapping at the RSE-lab

The goal of this project is to generate maps that describe environments in terms of objects and places. Such representations contain far more useful information than traditional geometric maps and enable robots to interact with humans in a more natural way.

Project Contributors

Dieter Fox, Bertrand Douillard, Stephen Friedman, Benson Limketkai, Fabio Ramos

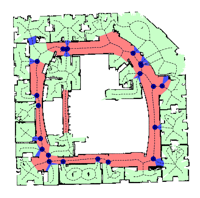

Learning to label places

To label the places in an indoor environment, our robot first builds an occupancy grid map using a laser range finder. It then labels every point on the Voronoi graph of this map, taking local shape and connectivity information into account. The parameters of the underlying statistical model are learned from previously explored environments.
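
The idea of labeling Voronoi graph points from local shape plus connectivity can be illustrated with a toy pairwise model. This is a minimal sketch under stated assumptions, not the Voronoi Random Field method itself: it assumes a single hypothetical shape feature per Voronoi point (its free-space width in meters), three hypothetical place classes, and hand-set constants (`LABEL_WIDTH_MEANS`, `SIGMA`, `SMOOTHNESS`) in place of learned parameters. Connectivity enters through a Potts-style term that rewards graph neighbors sharing a label, and iterated conditional modes (ICM) is used as a simple stand-in for proper inference.

```python
from typing import Dict, List, Tuple

# Hypothetical place classes and their typical free-space width (meters)
# at a Voronoi point. The real system learns its model parameters from
# previously explored environments; these constants are hand-set.
LABEL_WIDTH_MEANS: Dict[str, float] = {"room": 2.0, "hallway": 0.9, "doorway": 0.45}
SIGMA = 0.3       # hypothetical spread of widths around each class mean
SMOOTHNESS = 0.5  # hypothetical reward when two graph neighbors share a label


def unary_score(width: float, label: str) -> float:
    """Local-shape log-potential: Gaussian-shaped score on the width."""
    diff = width - LABEL_WIDTH_MEANS[label]
    return -(diff * diff) / (2.0 * SIGMA * SIGMA)


def pairwise_score(a: str, b: str) -> float:
    """Connectivity log-potential along a Voronoi edge (Potts model)."""
    return SMOOTHNESS if a == b else 0.0


def label_voronoi_graph(widths: List[float], edges: List[Tuple[int, int]]) -> List[str]:
    """Approximate MAP labeling with iterated conditional modes (ICM)."""
    neighbors: Dict[int, List[int]] = {i: [] for i in range(len(widths))}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    # Initialize each point with the best label from local shape alone.
    labels = [max(LABEL_WIDTH_MEANS, key=lambda lab: unary_score(w, lab)) for w in widths]

    changed = True
    while changed:
        changed = False
        for i, w in enumerate(widths):
            def total(lab: str) -> float:
                # Local shape evidence plus agreement with current neighbor labels.
                return unary_score(w, lab) + sum(
                    pairwise_score(lab, labels[j]) for j in neighbors[i]
                )

            best = max(LABEL_WIDTH_MEANS, key=total)
            # Switch only on a strict improvement so the loop always terminates.
            if total(best) > total(labels[i]):
                labels[i] = best
                changed = True
    return labels


if __name__ == "__main__":
    # A toy chain: room -> doorway -> hallway, with one noisy wide hallway point.
    widths = [2.1, 1.9, 2.0, 0.45, 0.9, 0.95, 1.5, 0.85, 0.9]
    edges = [(i, i + 1) for i in range(len(widths) - 1)]
    print(label_voronoi_graph(widths, edges))
    # ['room', 'room', 'room', 'doorway', 'hallway', 'hallway', 'hallway', 'hallway', 'hallway']
```

In this toy chain, the noisy point with a width of 1.5 m would be labeled a room from local shape alone, but its two hallway neighbors pull it to hallway, while the narrow doorway point keeps its own label. A learned model, richer shape features, and a more principled inference method would take the place of the hand-set constants and ICM.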


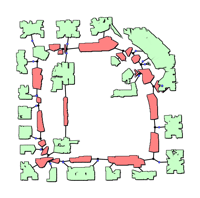

Object detection in urban environments

In collaboration with the Australian Centre for Field Robotics, we use a car equipped with a laser range finder and a camera to detect objects in urban environments. The laser points are projected into the camera images and then labeled with the type of object they hit. This labeling is done using a Conditional Random Field that takes both shape and appearance information into account.

Main publications

- Laser and Vision Based Outdoor Object Mapping. B. Douillard, D. Fox, and F. Ramos. RSS-08.
- A Spatio-Temporal Probabilistic Model for Multi-Sensor Multi-Class Object Recognition. B. Douillard, D. Fox, and F. Ramos. ISRR-07.
- A Spatio-Temporal Probabilistic Model for Multi-Sensor Object Recognition. B. Douillard, D. Fox, and F. Ramos. IROS-07.
- Voronoi Random Fields: Extracting the Topological Structure of Indoor Environments via Place Labeling. S. Friedman, H. Pasula, and D. Fox. IJCAI-07.
- Training Conditional Random Fields using Virtual Evidence Boosting. L. Liao, T. Choudhury, D. Fox, and H. Kautz. IJCAI-07.
- Relational Object Maps for Mobile Robots. B. Limketkai, L. Liao, and D. Fox. IJCAI-05.