Image Analogies

By Jiun-Hung Chen and Vaishnavi Sannidhanam

CSE 557

Winter 2004

{jhchen, vaishu}@cs

1. Introduction

1.1 Motivation

One of the tasks that most captured our interest during the Impressionist project was automatic painting. However, the automatic painting we implemented in that project could not paint in different styles: it simply placed random brush strokes until the entire picture was covered. Hence the idea of applying analogies, which are so intuitive to humans, to sets of images seemed really intriguing. Image analogies seem to give images a kind of artificial intelligence, in that one image can be transformed into another based on some set of parameters.

1.1.1 Problem Statement

Given a pair of images A and A’, where A’ is a filtered version of A, and a target image B, we want to synthesize an image B’ that relates to B in the same way that A’ relates to A:

A : A’ :: B : B’
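As a toy illustration of the analogy, the sketch below handles the degenerate case in which every pixel is matched on its own value alone, with no neighborhoods and no pyramids. The function name `naive_analogy` and the sample values are hypothetical and are not taken from our implementation.

```python
from typing import List


def naive_analogy(a: List[float], a_prime: List[float], b: List[float]) -> List[float]:
    """For each pixel of B, find the most similar pixel of A and copy the
    corresponding pixel of A'. Images are flattened to 1D lists here."""
    if len(a) != len(a_prime):
        raise ValueError("A and A' must have the same number of pixels")
    b_prime = []
    for q_value in b:
        # Index of the pixel in A whose value is closest to this pixel of B.
        p = min(range(len(a)), key=lambda i: (a[i] - q_value) ** 2)
        b_prime.append(a_prime[p])
    return b_prime


# A' is A with a "times ten" filter applied; the filter itself is never given.
A = [0.0, 1.0, 2.0, 3.0]
A_PRIME = [0.0, 10.0, 20.0, 30.0]
B = [3.0, 1.0, 1.0, 0.0]
print(naive_analogy(A, A_PRIME, B))
```

The effect of the filter is recovered purely from the example pair. The full algorithm replaces the single pixel value with a feature vector built from a neighborhood, which is what lets it reproduce textures and brush strokes rather than a per-pixel mapping.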

1.1.2 Algorithm

“Function CreateImageAnalogy(A, A’, B):

    Compute Gaussian pyramids for A, A’ and B
    Compute features for A, A’ and B
    For each level l from coarsest to finest, do:
        For each pixel q in B’_l, in scan-line order, do:
            p ← BestMatch(A, A’, B, B’, s, l, q)
            B’_l(q) ← A’_l(p)
            s_l(q) ← p
    Return B’_L

Function BestMatch(A, A’, B, B’, s, l, q):

    p_app ← BestApproximateMatch(A, A’, B, B’, l, q)
    p_coh ← BestCoherenceMatch(A, A’, B, B’, s, l, q)
    d_app ← ||F_l(p_app) - F_l(q)||^2
    d_coh ← ||F_l(p_coh) - F_l(q)||^2
    If d_coh <= d_app (1 + 2^(l-L) k) then
        Return p_coh
    Else
        Return p_app” [2]

Where,

A, A’ and B are the input images

l is the current pyramid level

L is the number of levels in the Gaussian pyramid

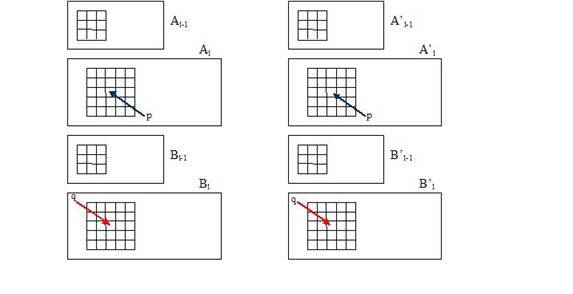

q is the current pixel of B’_l, visited in scan-line order

p is the pixel in A’ that best matches q

s_l(q) is the source map entry for q: it records the pixel p that was chosen for q

F_l(x) is the feature vector of pixel x at level l

p_app is the pixel that BestApproximateMatch returns

p_coh is the pixel that BestCoherenceMatch returns

d_app is the error with which p_app approximates q

d_coh is the error with which p_coh approximates q

k is the coherence parameter

BestApproximateMatch finds the closest matching pixel for a given q, based on feature vectors and neighborhoods

BestCoherenceMatch finds the closest matching pixel for a given q while attempting to preserve coherence with the neighboring synthesized pixels

Figure 1: Neighborhood Matching
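The matching step can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not our actual implementation: feature vectors are assumed to be precomputed and stored in a dictionary keyed by pixel, the approximate search is brute force, the coherence search looks only at the eight immediate neighbors of q, and the level index is assumed to reach `finest_level` (L) at the finest level. All function and parameter names are hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

Pixel = Tuple[int, int]  # (row, column)
Features = Dict[Pixel, Sequence[float]]


def sq_dist(u: Sequence[float], v: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(u, v))


def best_approximate_match(feat_a: Features, f_q: Sequence[float]) -> Pixel:
    """Brute-force nearest neighbor over every pixel of the example pair."""
    return min(feat_a, key=lambda p: sq_dist(feat_a[p], f_q))


def best_coherence_match(feat_a: Features, f_q: Sequence[float], q: Pixel,
                         source_map: Dict[Pixel, Pixel]) -> Optional[Pixel]:
    """Best candidate among s(r) + (q - r) for already synthesized neighbors r."""
    candidates = []
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            r = (q[0] + dy, q[1] + dx)
            if r == q or r not in source_map:
                continue
            # s(r) + (q - r): continue the patch that r was copied from.
            cand = (source_map[r][0] - dy, source_map[r][1] - dx)
            if cand in feat_a:
                candidates.append(cand)
    if not candidates:
        return None
    return min(candidates, key=lambda p: sq_dist(feat_a[p], f_q))


def best_match(feat_a: Features, f_q: Sequence[float], q: Pixel,
               source_map: Dict[Pixel, Pixel], level: int,
               finest_level: int, kappa: float) -> Pixel:
    """Choose between the approximate and the coherence match."""
    p_app = best_approximate_match(feat_a, f_q)
    p_coh = best_coherence_match(feat_a, f_q, q, source_map)
    if p_coh is None:
        return p_app
    d_app = sq_dist(feat_a[p_app], f_q)
    d_coh = sq_dist(feat_a[p_coh], f_q)
    if d_coh <= d_app * (1 + 2 ** (level - finest_level) * kappa):
        return p_coh
    return p_app
```

Because `source_map` only contains pixels that have already been synthesized, visiting q in scan-line order means the coherence candidates come from the pixels above and to the left of q.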

2.1 Toy Filters

Although toy filters are very easy to generate with traditional image filters (such as a Gaussian), reproducing them gives us an idea of how Image Analogies can work even on the most basic aspects of image processing. One of the toy filters we were able to generate was the embossing effect.

Figure 2: Emboss

2.2 Artistic Filters

Artistic filters can generate watercolor, pastel and line-art styled images. Once we have a <photo, painting> pair, we can generate a painting in the same style from any given photo.

Figure 3: Water Color

Figure 4: Pastel Rendering

Figure 5: Line Rendering

2.3 Texture by Numbers

The idea behind texture by numbers is to synthesize a new image based on the intensity mappings of A and B along with the color of A’. A new, realistic image can be synthesized by this process. (The following Sphinx (B’) was generated on the coarsest level only and hence does not look as realistic. Pyramid and level synthesis are discussed in later sections.)

Figure 6: Texture by Numbers

2.4 Exemplar Based Surface Texture

The idea behind exemplar based surface texture is to use normal maps of 3D surfaces in place of photos for A and B. A is usually the normal map of a sphere or an ellipsoid, while B is the normal map of the surface of interest. A’ is the texture on that same sphere or ellipsoid, and the algorithm maps this texture onto B to produce B’. Spheres and ellipsoids are mostly used because their normals transition smoothly from one to the next.

Figure 7(a): Exemplar based surface texture on Meat Ball

The following textures on the human face were generated by changing A’.

Figure 7(b): Exemplar based surface texture (top left: blue clay, top right: tennis ball, bottom left: potato, bottom right: chocolate)

2.4.1 Lighting Variation

We can use the normal map of a 3D sphere or ellipsoid as our A, the same sphere with some lighting variation on it as our A’, and the normal map of an interesting 3D surface as our B. We can then simulate the same lighting variation on B to get B’.

Figure 8: Light Variation and Exemplar Based Surface Texture

Video 1: Light Variation
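To make the roles of A and A’ in the lighting experiment concrete, the sketch below builds a sphere normal map (A) and a lit version of the same sphere (A’). It is only an illustration: it assumes an orthographic view of a unit sphere and simple Lambertian (diffuse) shading, neither of which is necessarily how our inputs were produced, and the names `sphere_normal`, `lambert` and `render_pair` are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def sphere_normal(x: float, y: float) -> Optional[Vec3]:
    """Normal of a unit sphere seen orthographically at image position (x, y),
    with x and y in [-1, 1]. Returns None outside the sphere's silhouette."""
    r2 = x * x + y * y
    if r2 > 1.0:
        return None
    return (x, y, math.sqrt(1.0 - r2))


def lambert(normal: Vec3, light: Vec3) -> float:
    """Diffuse intensity max(0, n . l) for a light direction of any length."""
    length = math.sqrt(sum(c * c for c in light))
    unit_light = tuple(c / length for c in light)
    return max(0.0, sum(n * l for n, l in zip(normal, unit_light)))


def render_pair(size: int, light: Vec3) -> Tuple[List[Optional[Vec3]], List[Optional[float]]]:
    """Return (A, A') as flat row-major lists: normals and shaded intensities."""
    a: List[Optional[Vec3]] = []
    a_prime: List[Optional[float]] = []
    for row in range(size):
        for col in range(size):
            # Map pixel centers to the square [-1, 1] x [-1, 1].
            x = (col + 0.5) / size * 2.0 - 1.0
            y = (row + 0.5) / size * 2.0 - 1.0
            n = sphere_normal(x, y)
            a.append(n)
            a_prime.append(None if n is None else lambert(n, light))
    return a, a_prime
```

Since a sphere contains every visible normal direction, any normal found on B has a close match somewhere on A, and the matched pixel of A’ tells us how bright that orientation should be under the new light.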

2.4.2 Shadow Variation

Video 2: Shadow Variation

3. Analysis

Different Coherence Parameters

d_coh <= d_app (1 + 2^(l-L) * k)

Where,

d_app is the error of the pixel that BestApproximateMatch returns

d_coh is the error of the pixel that BestCoherenceMatch returns

k is the coherence parameter

As k increases, the range of errors within which the coherence match is selected as the best match grows, which increases the probability that the coherence match is chosen over the more accurate approximate match.

Hence, higher k values result in B’ inheriting more of the properties of A’.

Figure 9: Variation in coherence parameter
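A small worked example shows how the threshold behaves. The sketch assumes that the level index l reaches L at the finest level, so that the factor 2^(l-L) weakens the effect of k on coarser levels; the function names and the values of k are illustrative only.

```python
def coherence_factor(level: int, finest_level: int, kappa: float) -> float:
    """Multiplier applied to d_app before it is compared with d_coh."""
    return 1.0 + 2.0 ** (level - finest_level) * kappa


def prefers_coherence(d_app: float, d_coh: float, level: int,
                      finest_level: int, kappa: float) -> bool:
    """True when the coherence match is accepted despite its larger error."""
    return d_coh <= d_app * coherence_factor(level, finest_level, kappa)


# Print the tolerated ratio d_coh / d_app for a three-level pyramid (L = 2).
for kappa in (0.0, 0.5, 5.0):
    for level in (0, 1, 2):
        print(kappa, level, coherence_factor(level, 2, kappa))
```

With k = 0 the factor is always 1, so the coherence match wins only when it is at least as accurate as the approximate match. With k = 5 at the finest level the factor is 6, so a coherence match with up to six times the error is still accepted.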

Single vs. Multi Level Renderings

Multi-level renderings tend to have more detail than single-level renderings, because information is smoothed out as we move towards the coarser levels.

As we know, filtering an image smooths away its higher-frequency data. Hence, to generate detail or effects at several scales, we need to combine the information stored in the various levels. If we use just a single level, information is lost and the result is less precise.

Figure 10: Variation in the number of levels used to render the image
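The loss of high-frequency data at coarse levels can be seen in a small sketch of a Gaussian pyramid. For brevity it works on a 1D signal rather than a 2D image and uses the 5-tap binomial kernel (1, 4, 6, 4, 1)/16 as an approximation of a Gaussian; both choices, and the function names, are assumptions made for illustration.

```python
from typing import List

KERNEL = (1, 4, 6, 4, 1)  # binomial approximation of a Gaussian; sums to 16


def blur(signal: List[float]) -> List[float]:
    """Convolve with KERNEL / 16, clamping indices at the borders."""
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(KERNEL):
            j = min(max(i + k - 2, 0), n - 1)
            acc += w * signal[j]
        out.append(acc / 16.0)
    return out


def gaussian_pyramid(signal: List[float], levels: int) -> List[List[float]]:
    """Return [finest, ..., coarsest]; each level is blurred and halved."""
    pyramid = [list(signal)]
    for _ in range(levels - 1):
        pyramid.append(blur(pyramid[-1])[::2])
    return pyramid


# A signal that alternates between 0 and 10 is pure high-frequency detail.
fine = [0.0, 10.0] * 8
pyramid = gaussian_pyramid(fine, 2)
print(pyramid[0])
print(pyramid[1])
```

Away from the borders, every sample of the coarser level comes out as 5.0: the alternating pattern is gone. A rendering that uses only this level has no way to recover it, which is why the finer levels are needed to restore detail.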

4. Conclusion

There are a few drawbacks to our implementation of Image Analogies. One is that it is slow, which could be improved by using a data structure that accelerates the nearest neighbor search. Another drawback of Image Analogies is that each kind of effect needs a different tweak to the algorithm to get the desired results. However, as the generated pictures above show, Image Analogies is a very simple and intuitive tool for capturing various textures and properties and applying them to images. We were able to generate textures not only on 2D images but also on 3D surfaces. Image Analogies can also be used to simulate lighting and shadow effects, creating new movies based on old ones.

5. Acknowledgements

We want to extend our special thanks to Brian Curless for explaining the concepts and advising us on various issues during the course of this project, and also for providing us with a face model. We would also like to thank Ian Simon for responding to our various queries quickly and succinctly.

6. References

[1] “Exemplar Based Surface Texture”, A. Haro, and

[2] “Image Analogies”, Aaron Hertzmann, Charles E. Jacobs, Nuria Oliver, Brian Curless, and David H. Salesin. SIGGRAPH 2001,

[3] http://www.150.si.edu/images/8miki.jpg

[4] http://www.cc.gatech.edu/cpl/projects/surfacetexture/

[5] http://www.cs.washington.edu

[6] http://www.fpaota.org/fruits%20&%20vegetables/grapes.2.jpg -- grapes

[7] http://www.mrl.nyu.edu/projects/image-analogies/

[8] http://www.printphoto.com/contest_pics/finalist0802/tacoma%20fireworks.jpg -- fireworks

[9] http://image.gsfc.nasa.gov/poetry/movies/movies.html -- movies

[11] http://www.opl.ucsb.edu/grace/nzweb/pics/forest.jpg -- forest

[12] http://www.worldalmanacforkids.com/explore/images/art-nations-sphinx.jpg -- sphinx

NOTE: The B images are taken from the web sites listed above; the B’ images are generated.