To see our project in action, please select either Option 1 or Option 2 to download the executable and run the application yourself!

Download Sound Animator

| Option | File | Size | Description |
| --- | --- | --- | --- |
| Option 1 | anisound_full.zip | 20.05 MB | Includes animator.exe, required .dlls, example animation scripts, and example sound files. |
| Option 2, Part 1 | anisound.zip | 1.04 MB | Includes animator.exe, required .dlls, and example animation scripts. |
| Option 2, Part 2 | sounds.zip | 19.5 MB | Includes example sound files. |

Note that there are some known issues with the current version of this application, mainly that the pause/playback functionality does not work properly once a wave file is loaded. I had this working for a while, then made some changes that broke it, and never had a chance to go back and fix it. Other than this annoyance, the application should work with basically any .wav file (it has been tested most often with 16-bit, 44100 Hz, stereo wave files).

Based on our experiences with the previous CSE557 animator project, we knew how difficult it was to manually align motion to sound. So our goal in this project was to create an animation system that uses sound (e.g. .wav music files) to influence motion, which might alleviate some of the pain of hand animation and, if nothing else, would integrate support for sound into the animator skeleton. We use known sound analysis techniques, including FFT and beat analysis, to activate time-sensitive motion cycles in our animations. This is somewhat analogous to the music visualization systems found in Winamp and Windows Media Player.

Given the 2-week limitation of the final project, our initial ambitions were rather modest: simply to use certain salient features of sound data to influence motion. We decided to analyze three key features of sound: beats, frequency distributions, and sound magnitude thresholds.

The beat detector looks for patterns in sound magnitude thresholds over time. A 2D volume meter was created to visualize the current mono or dual-channel sound magnitudes, which provided real-time feedback about channel intensities. This was useful as a visual check on our beat analyzer (a bit of ground truth, as it were). The drums were created by setting a beat threshold; whenever this threshold was exceeded, the mallet animation motion cycle was triggered.
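The threshold trigger used for the drums can be sketched roughly as follows. This is a minimal illustration in Python, not the project's actual code: the function name `detect_beats`, the per-frame magnitude list, and the choice to fire only on the rising edge (so that one loud passage triggers one mallet swing rather than one per frame) are all assumptions.

```python
def detect_beats(magnitudes, threshold):
    """Return the frame indices at which the magnitude first rises above threshold.

    `magnitudes` is a hypothetical list of per-frame sound magnitudes in [0, 1].
    Triggering only on the rising edge is an assumption made for this sketch.
    """
    beats = []
    above = False  # was the previous frame already over the threshold?
    for i, m in enumerate(magnitudes):
        if m > threshold and not above:
            beats.append(i)  # the mallet motion cycle would start on this frame
        above = m > threshold
    return beats


levels = [0.1, 0.2, 0.9, 0.95, 0.3, 0.1, 0.8, 0.2]
print(detect_beats(levels, threshold=0.7))  # prints [2, 6]
```

Frame 3 is also above the threshold, but it does not trigger again because frame 2 already did.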


We used fftw to compute a one-dimensional discrete Fourier transform (DFT) of the sound data. The DFT transforms sampled data from the time domain to the frequency domain. See Wikipedia for more information. The piano was created by assigning each white key ownership of a given frequency range. The intensity in that frequency range affected the color and depression of the key. At an intensity of zero, the key was displayed in white and at full height. At full normalized intensity (1.0), the key was shown as dark red and fully depressed.
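To make the key mapping concrete, here is a small Python sketch of the idea. It is not the project's implementation: a naive DFT stands in for fftw so the example needs no external library, and the equal-width frequency ranges, the use of the peak magnitude per range, the RGB value chosen for "dark red", and all function names are assumptions.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Naive O(n^2) DFT; returns the magnitudes of the first n // 2 bins."""
    n = len(samples)
    mags = []
    for k in range(n // 2):
        s = sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, x in enumerate(samples))
        mags.append(abs(s))
    return mags


def key_intensities(mags, num_keys):
    """Give each key an equal-width range of bins (assumes num_keys <= len(mags)).

    Each key's intensity is the peak magnitude in its range, normalized to [0, 1].
    """
    per_key = len(mags) // num_keys
    raw = [max(mags[i * per_key:(i + 1) * per_key]) for i in range(num_keys)]
    peak = max(raw)
    return [r / peak if peak > 0 else 0.0 for r in raw]


def key_appearance(intensity, dark_red=(0.5, 0.0, 0.0), max_depth=1.0):
    """Blend from white at full height (0.0) to dark red, fully depressed (1.0)."""
    white = (1.0, 1.0, 1.0)
    color = tuple(w + (d - w) * intensity for w, d in zip(white, dark_red))
    return color, intensity * max_depth


# A 64-sample sine wave with 5 cycles puts its energy in DFT bin 5.
samples = [math.sin(2 * math.pi * 5 * t / 64) for t in range(64)]
intensities = key_intensities(dft_magnitudes(samples), num_keys=8)
for key, level in enumerate(intensities):
    color, depth = key_appearance(level)
    print(key, round(level, 3), depth)
```

With 32 usable bins and 8 keys, each key owns 4 bins, so the second key (bins 4 to 7) is the one that turns dark red and is pressed down; the others stay essentially white and at full height.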


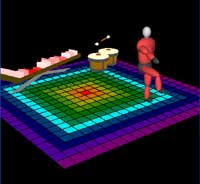

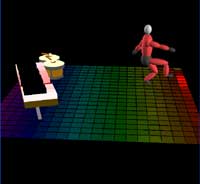

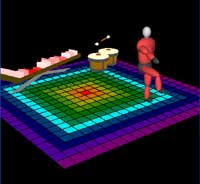

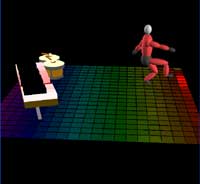

Finally, some really basic sound animation triggers were established to give our animation some variability. The floor had three basic layouts: a linear frequency bin layout, a ring layout, and a pseudo-random rainbow layout. These layouts were switched dynamically depending on a sound amplitude threshold. If this threshold was exceeded (and N frames had been rendered since the last trigger), then the layout was switched.

(above) The Linear Frequency Bin Layout

(above) The Pseudo-Random Frequency Bin Rainbow Layout

(above) The Frequency Ring Layout
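The floor layout switching rule described earlier (an amplitude threshold plus a minimum of N frames between triggers) might look something like this in Python. The class name `LayoutSwitcher`, the fixed cycling order of the layouts, and the decision to allow a switch on the very first loud frame are assumptions made for illustration; the original text only states the threshold and the N-frame condition.

```python
LAYOUTS = ["linear", "ring", "rainbow"]  # cycling order is an assumption


class LayoutSwitcher:
    def __init__(self, threshold, min_frames):
        self.threshold = threshold
        self.min_frames = min_frames  # the "N frames" between triggers
        self.current = 0
        # Start as if the cooldown had already elapsed (an assumption).
        self.frames_since_switch = min_frames

    def update(self, amplitude):
        """Call once per rendered frame; returns the name of the active layout."""
        self.frames_since_switch += 1
        if (amplitude > self.threshold
                and self.frames_since_switch >= self.min_frames):
            self.current = (self.current + 1) % len(LAYOUTS)
            self.frames_since_switch = 0
        return LAYOUTS[self.current]


switcher = LayoutSwitcher(threshold=0.8, min_frames=3)
print([switcher.update(a) for a in [0.9, 0.9, 0.9, 0.9, 0.1, 0.9]])
# prints ['ring', 'ring', 'ring', 'rainbow', 'rainbow', 'rainbow']
```

The cooldown keeps a sustained loud passage from flipping the layout on every frame, which is presumably why the frame count was part of the condition.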

1. We used fftw for our frequency analysis:

http://www.fftw.org

2. We used libsndfile for our wave i/o:

http://www.mega-nerd.com/libsndfile/

3. We used fltk for our GUI framework:

http://www.fltk.org (note that our .exe provided above is only for Windows!)

4. A plethora of analog signal processing/FFT websites, newsgroups, etc.