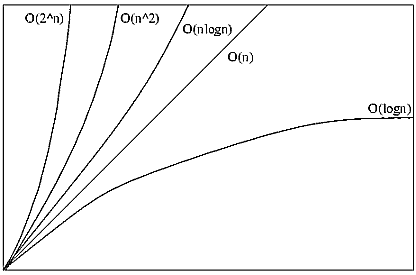

The "asymptotic complexity" of an algorithm is simply a measure of how rapidly its running time grows as the size of the input approaches infinity. We measure complexity using "big-O notation":

f(n) = O(g(n))

indicates that f(n) is bounded by g(n) times a constant factor, for sufficiently large n. In other words, if f(n) is O(g(n)), g(n) is "bigger than or equal to" f(n).

We use O(f(n)) notation because, among other things, performance over small inputs is basically not very important. Basically, everything is fast on very small data sets. It's large data sets that we care about.

Which of the following statements is true?

- n2 = O(n)

- logn = O(log(logn))

- 5n = O(3n)

- logn = O(sqrt(n))

- n = O(2n)

(Note: diagram is not to scale.)