Eigenfaces Artifact

Cody Andrews

CSE 455 Computer Vision

Project 4

Average Face + 10 Eigenfaces

Faces Recognized vs Number of Eigenfaces Used (of 33)

Recognition Mistakes

Smiling 1 recognized as nonsmiling 16 - Correct sorted position: 5

Smiling 2 recognized as nonsmiling 27 - Correct sorted position: 2

Smiling 4 recognized as nonsmiling 25 - Correct sorted position: 4

Smiling 9 recognized as nonsmiling 31 - Correct sorted position: 2

Smiling 15 recognized as nonsmiling 24 - Correct sorted position: Not in top 5

Smiling 17 recognized as nonsmiling 19 - Correct sorted position: 2

Smiling 18 recognized as nonsmiling 24 - Correct sorted position: 3

Smiling 22 recognized as nonsmiling 16 - Correct sorted position: 3

Smiling 23 recognized as nonsmiling 11 - Correct sorted position: 2

Smiling 24 recognized as nonsmiling 18 - Correct sorted position: 3

Testing Recognition Discussion

From the chart above, beyond 10 eigenfaces there is effectively no change in the number of faces that the recognizeFace method matches correctly. Because using more eigenfaces requires more memory and time, 10 eigenfaces is the best choice for this project.

Most of the errors were reasonable confusions between similar-looking faces. The exception is smiling 4 being recognized as nonsmiling 25: his wild expression seems to have been just too different for the system to handle. When an image was matched incorrectly, the program was usually very close. In 7 of the 10 mistakes, the correct face was ranked 2nd or 3rd.
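The recognition step and the "correct sorted position" column above can be sketched as follows. This is a minimal sketch, not the project's recognizeFace code: it assumes faces are flattened lists of floats, the eigenfaces are orthonormal, each user is stored as one face image, and users are compared by the MSE between their eigenface coefficient vectors. The parameter k plays the role of the number of eigenfaces used in the chart; project, rank_users, and correct_position are hypothetical names.

```python
def project(face, mean, eigenfaces, k):
    """Coefficients of (face - mean) on the first k eigenfaces (assumed orthonormal)."""
    centered = [f - m for f, m in zip(face, mean)]
    return [sum(c * e for c, e in zip(centered, eig)) for eig in eigenfaces[:k]]


def coeff_mse(a, b):
    """Mean squared error between two coefficient vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def rank_users(face, users, mean, eigenfaces, k):
    """User names sorted from best match (lowest coefficient MSE) to worst."""
    query = project(face, mean, eigenfaces, k)
    scores = {
        name: coeff_mse(query, project(img, mean, eigenfaces, k))
        for name, img in users.items()
    }
    return sorted(scores, key=scores.get)


def correct_position(face, true_name, users, mean, eigenfaces, k):
    """1-based rank of the correct user; 1 means the face was recognized."""
    return rank_users(face, users, mean, eigenfaces, k).index(true_name) + 1
```

Each projection costs about k dot products over the full image length, so once extra eigenfaces stop changing the ranking they only add time and memory.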

Cropping elf.tga

Marked Image (min_scale=0.45, max_scale=0.55, step=0.01):

Scoremap for scale=0.55 (darker areas are better matches):

Cropped Image:

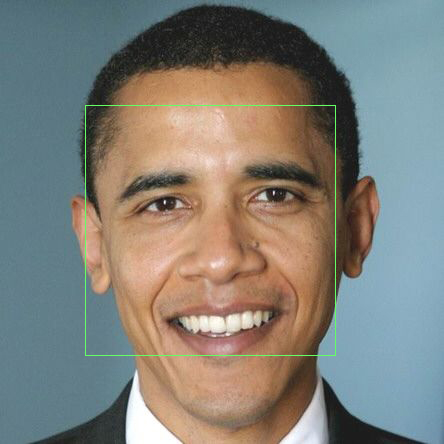

Cropping Obama.tga

Marked Image (min_scale=0.01, max_scale=0.10, step=0.01):

Result Image:

Finding faces in group3.tga

Marked Image (min_scale=0.50, max_scale=0.60, step=0.01):

Scoremap for scale=0.60 (darker areas are better matches):

Finding faces in a group of friends

Marked Image (min_scale=0.30, max_scale=0.50, step=0.02):

Scoremap for scale=0.50 (darker areas are better matches):

Finding Faces Discussion

My face finder combines two mean squared error (MSE) scores to locate each face. One is the MSE to the face plane; the other comes from a highly simplified skin classifier.

To generate these MSEs, a square window of pixels was sampled at every position in the image. For each window, the MSE between the window and its projection onto the face plane was calculated. Then the MSE between the window and the "average skin color" (rgb: 177,120,91) was calculated and multiplied by the first MSE. This combined value at every pixel is what the "Scoremap" images above show, and my algorithm reports the darkest points of the map as faces.
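A minimal sketch of the combined score described above. It assumes each window is compared in RGB (the write-up does not say whether the face plane was built from color or grayscale pixels), that the eigenfaces are orthonormal vectors of length 3 * size * size, and that the image is a list of rows of (r, g, b) tuples. The helper names are hypothetical, and the loop over scales (min_scale to max_scale in increments of step) is omitted; one plausible version would rescale the image, call score_map again, and keep the lowest scores.

```python
SKIN_RGB = (177, 120, 91)  # the single reference skin color from the write-up


def window_pixels(image, x, y, size):
    """RGB tuples of the size x size window whose top-left corner is (x, y)."""
    return [image[y + dy][x + dx] for dy in range(size) for dx in range(size)]


def face_plane_mse(vec, mean, eigenfaces):
    """MSE between a vector and its reconstruction from orthonormal eigenfaces."""
    centered = [v - m for v, m in zip(vec, mean)]
    coeffs = [sum(c * e for c, e in zip(centered, eig)) for eig in eigenfaces]
    recon = list(mean)
    for a, eig in zip(coeffs, eigenfaces):
        recon = [r + a * e for r, e in zip(recon, eig)]
    return sum((v - r) ** 2 for v, r in zip(vec, recon)) / len(vec)


def skin_mse(pixels):
    """Per-channel mean squared distance from each pixel to SKIN_RGB."""
    total = sum((c - s) ** 2 for p in pixels for c, s in zip(p, SKIN_RGB))
    return total / (3 * len(pixels))


def score_map(image, size, mean, eigenfaces):
    """Skin-weighted score for every valid window position (lower = more face-like)."""
    h, w = len(image), len(image[0])
    scores = {}
    for y in range(h - size + 1):
        for x in range(w - size + 1):
            pixels = window_pixels(image, x, y, size)
            vec = [float(c) for p in pixels for c in p]  # flatten to r,g,b,r,g,b,...
            scores[(x, y)] = face_plane_mse(vec, mean, eigenfaces) * skin_mse(pixels)
    return scores
```

`min(scores, key=scores.get)` then returns the top-left corner of the darkest point in the scoremap. Finding several faces would also require suppressing overlapping windows, which this sketch does not show.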

Before I implemented skin detection, my output was very poor: it found almost none of the faces in any of the images. The face-plane MSE alone did have local minima around faces, but it also strongly preferred areas with little texture, such as people's shirts, the whiteboard in the background of group3.tga, and the wall in the image of my friends. Adding skin detection helped significantly, but did not completely override that preference.

Verify Face Testing

This plot compares the MSE between a face and itself with the MSE between a face and a different face.

The blue bars show the MSE when verifyFace is called on an image with itself.

The red bars show the MSE when verifyFace is called on an image with another image.

A good threshold lies above as many blue bars and below as many red bars as possible. In this case I chose 3000, which is shown as the green bar on the graph.

The false positive rate for these 10 faces using a threshold of 3000 is 10%.

The false negative rate for these 10 faces using a threshold of 3000 is 30%.
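The threshold choice and the two error rates can be computed mechanically. This sketch assumes verifyFace accepts a pair when its MSE is at or below the threshold, so a false negative is a same-face MSE (blue bar) above the threshold and a false positive is a different-face MSE (red bar) at or below it. The function names are hypothetical, and best_threshold simply tries every observed MSE as a candidate and minimizes the sum of the two rates.

```python
def error_rates(same_mse, diff_mse, threshold):
    """Return (false_positive_rate, false_negative_rate) for one threshold.

    same_mse: MSEs for pairs that should be accepted (blue bars).
    diff_mse: MSEs for pairs that should be rejected (red bars).
    A pair is accepted when its MSE <= threshold.
    """
    false_neg = sum(1 for m in same_mse if m > threshold) / len(same_mse)
    false_pos = sum(1 for m in diff_mse if m <= threshold) / len(diff_mse)
    return false_pos, false_neg


def best_threshold(same_mse, diff_mse):
    """Observed MSE value that minimizes FP rate + FN rate (first one wins ties)."""
    candidates = sorted(set(same_mse) | set(diff_mse))
    return min(candidates, key=lambda t: sum(error_rates(same_mse, diff_mse, t)))
```

With 10 faces, the reported rates correspond to 1 red bar at or below 3000 (10%) and 3 blue bars above it (30%), under the acceptance rule assumed here.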

Extra Credit

Face Morphing: To morph partway from one face to another, I projected both faces onto the eigenfaces, multiplied each face's coefficients by its weight, added them, and reconstructed the result.
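A sketch of this morph, assuming the two weights are 1 - w and w, the eigenfaces are orthonormal, and faces are flattened lists of floats; morph is a hypothetical name, not the project's function.

```python
def morph(face_a, face_b, weight, mean, eigenfaces):
    """Blend two faces in eigenface space: weight=0 gives A's projection, 1 gives B's."""

    def project(face):
        # Coefficients of (face - mean) on each orthonormal eigenface.
        centered = [f - m for f, m in zip(face, mean)]
        return [sum(c * e for c, e in zip(centered, eig)) for eig in eigenfaces]

    coeffs = [
        (1 - weight) * a + weight * b
        for a, b in zip(project(face_a), project(face_b))
    ]
    # Reconstruct: mean + sum of coefficient * eigenface.
    recon = list(mean)
    for c, eig in zip(coeffs, eigenfaces):
        recon = [r + c * e for r, e in zip(recon, eig)]
    return recon
```

Because reconstruction is affine in the coefficients and the weights sum to 1, blending coefficients this way gives the same image as blending the two reconstructed faces pixel by pixel. An animation could be built by calling morph for weights 0, 0.1, ..., 1 and saving each result as a frame.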

Animation:

Skin Detection: I used a primitive form of skin detection that compared each window to a single skin color (rgb: 177,120,91). The MSE to this color was calculated for every window used in the normal algorithm and then multiplied by the face-projection MSE to get a skin-weighted MSE. Although this works fairly well for people with lighter skin, it will fail for people with darker skin, and in dark or very bright images.

*The template for this webpage was borrowed from Andy Hou