Methodology

I computed 10 eigenfaces from the cropped, non-smiling student faces and constructed a userbase.

I then used them to recognize the cropped, smiling student faces, and 23 of the 33 faces were recognized correctly.
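A minimal sketch of the recognition step described above, assuming each face is a flat list of grayscale pixel values, the eigenfaces are orthonormal, and a match is scored by the MSE between eigenface coefficients. The function names and the toy 4-pixel data are hypothetical and are not taken from the project code.

```python
def project(face, mean_face, eigenfaces):
    """Return the coefficients of `face` in the eigenface basis."""
    centered = [p - m for p, m in zip(face, mean_face)]
    return [sum(e_i * c_i for e_i, c_i in zip(e, centered)) for e in eigenfaces]


def coefficient_mse(a, b):
    """Mean squared error between two coefficient vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def rank_users(face, mean_face, eigenfaces, userbase):
    """Return user names sorted from best match to worst match."""
    coeffs = project(face, mean_face, eigenfaces)
    return sorted(userbase, key=lambda name: coefficient_mse(coeffs, userbase[name]))


# Toy data (hypothetical): 4-pixel "images" and two orthonormal eigenfaces.
mean_face = [10.0, 10.0, 10.0, 10.0]
eigenfaces = [[0.5, 0.5, 0.5, 0.5], [0.5, -0.5, 0.5, -0.5]]
neutral = {"alice": [12.0, 8.0, 12.0, 8.0], "bob": [14.0, 14.0, 14.0, 14.0]}

# The userbase stores one coefficient vector per neutral face.
userbase = {name: project(img, mean_face, eigenfaces) for name, img in neutral.items()}

smiling_alice = [13.0, 8.0, 12.0, 9.0]
ranking = rank_users(smiling_alice, mean_face, eigenfaces, userbase)
print(ranking)
```

Returning the full ranking rather than only the best match makes it possible to check whether the correct person appears among the top few candidates.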

Average face:

Ten eigenfaces:

Furthermore, I varied the number of eigenfaces used from 1 to 33 in steps of 2. The curve is shown below.

Note: The y-axis is the number of faces recognized. The x-axis is the number of eigenfaces used.

Questions

Question 1: The number of faces recognized grows very quickly at first and then levels off at around 22 to 23. More eigenfaces give a more accurate face reconstruction, but comparing two faces then takes more time. I think 7 to 12 eigenfaces are appropriate. There is no single clear answer, since it depends on the number of faces in the whole data set and on how much the faces differ from each other.

Question 2: Most mistakes are reasonable, and the correct answer usually appears among the top three ranked matches.

Two sets of mismatches are shown below.

The input smiling face:

The wrong corresponding face:

The correct corresponding face:

We can see that the correct face actually looks very different from the input. In the smiling face one eye is closed, and the visible teeth also make a big difference.

The input smiling face:

The wrong corresponding face:

The correct corresponding face:

We can see that the face angle of the correct one is very different from that of the input face.

Methodology

I used the file of 10 eigenfaces to run --findface to crop the elf.tga image, with min_scale, max_scale, and step parameters of 0.45, 0.55, and 0.01. The crop came out as a baby's face! The two images are as follows:

I found a digital picture of myself and cropped it, which gave me my face.

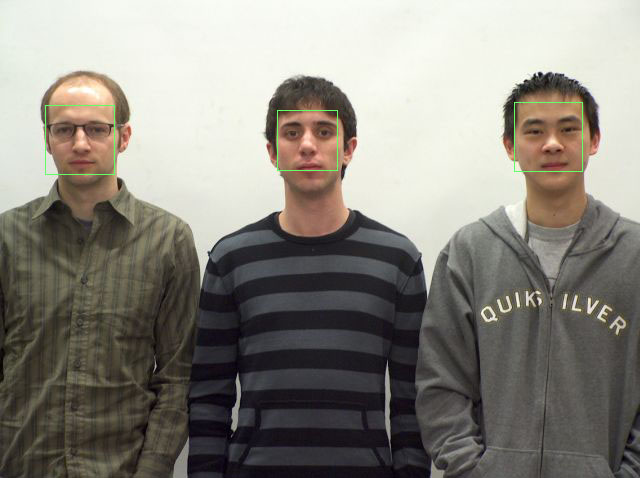

I used group1.tga image as input and I successfully found 3 faces.

I used another group image with 4 people as input and found 3 of their faces.

Questions

Question 1:

The min_scale and max_scale I used for the baby image are 0.45 and 0.55 respectively.

The min_scale and max_scale I used for my solo picture are 0.12 and 0.22 respectively.

The min_scale and max_scale I used for the 3-people image are 0.3 and 0.4 respectively.

The min_scale and max_scale I used for the 4-people image are 0.6 and 0.7 respectively.
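To make the role of these parameters concrete, here is a small sketch of how a scale range can be expanded into the individual scales to try. It assumes the sweep includes both endpoints and uses the 0.01 step reported for the baby image; `scale_steps` is a hypothetical helper, not a function from the project.

```python
def scale_steps(min_scale, max_scale, step):
    """List the scales from min_scale to max_scale (inclusive) in `step` increments."""
    # Count steps with round() so floating-point noise does not drop the last scale.
    count = int(round((max_scale - min_scale) / step))
    # Round each value to hide accumulated floating-point error.
    return [round(min_scale + i * step, 10) for i in range(count + 1)]


# Values used for the baby image: min_scale 0.45, max_scale 0.55, step 0.01.
baby_scales = scale_steps(0.45, 0.55, 0.01)
print(len(baby_scales), baby_scales[0], baby_scales[-1])
```

Each scale means one full pass over the resized image, so a narrow range around the expected face size keeps the search fast.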

Question 2:

From the pictures above, we can see that I marked one wrong square in the 4-people image: it covers a girl's hands and red clothes instead of her face.

My code does not use color cues to recognize faces. It uses only MSE, which is not enough to reject something that is shaped like a face but has completely wrong colors. I therefore believe that, if color cues were implemented in my code, the red color of the clothes would let the program reject this square.

The reason it didn't mark the girl's face may be that her hair shades about one fourth of her face. When a color image is converted to a gray image, "black" corresponds to a value close to zero, which makes the MSE (the error) larger.
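The effect of a dark region on the error can be illustrated with a toy example. This sketch assumes the MSE in question is the reconstruction error between a grayscale window and its projection onto the eigenfaces; the report only says "MSE", so that reading, the function name, and the 4-pixel data are all assumptions.

```python
def reconstruction_mse(window, mean_face, eigenfaces):
    """MSE between a window and its reconstruction from orthonormal eigenfaces."""
    centered = [p - m for p, m in zip(window, mean_face)]
    coeffs = [sum(e_i * c_i for e_i, c_i in zip(e, centered)) for e in eigenfaces]
    # Rebuild the window as the mean face plus the weighted eigenfaces.
    recon = list(mean_face)
    for w, e in zip(coeffs, eigenfaces):
        recon = [r + w * e_i for r, e_i in zip(recon, e)]
    return sum((p - r) ** 2 for p, r in zip(window, recon)) / len(window)


# Toy data (hypothetical): a 4-pixel mean face and a single eigenface.
mean_face = [100.0, 100.0, 100.0, 100.0]
eigenfaces = [[0.5, 0.5, 0.5, 0.5]]

clear_face = [120.0, 120.0, 120.0, 120.0]
shaded_face = [0.0, 120.0, 120.0, 120.0]  # one fourth of the pixels is near black

clear_error = reconstruction_mse(clear_face, mean_face, eigenfaces)
shaded_error = reconstruction_mse(shaded_face, mean_face, eigenfaces)
print(clear_error, shaded_error)
```

In this toy case the unshaded window is reconstructed exactly, while the window with one near-black pixel is not, so its error is much larger.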

Discussion

The image below is from a previous class; there are 32 people in it. My program found 15 faces in this image, while also treating some all-white subimages and some table and wall edges as faces. This is reasonable because I used pure MSE as the error of a square, without color cues.

It is worth mentioning that, instead of preventing squares from overlapping entirely, I defined an allowance variable that permits a small overlapping region between two squares. This works well when people's faces are very close to each other. It also helps when a big nonsense square occupies a large region, because faces that overlap only a little with the big square would otherwise not be considered "good" faces. It doesn't show its strength in this case, though.
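The overlap allowance can be sketched as follows. This is only an illustration: it assumes squares are given as (x, y, size), that candidates are accepted greedily from lowest error to highest, and that the allowance is a fraction of the smaller square's area. The actual definition of the allowance variable in my code may differ, and all names here are hypothetical.

```python
def overlap_area(a, b):
    """Area shared by two axis-aligned squares given as (x, y, size)."""
    ax, ay, a_size = a
    bx, by, b_size = b
    width = min(ax + a_size, bx + b_size) - max(ax, bx)
    height = min(ay + a_size, by + b_size) - max(ay, by)
    return max(width, 0) * max(height, 0)


def select_squares(candidates, allowance):
    """Greedily keep low-error squares whose overlap with kept squares is small.

    candidates: list of (error, (x, y, size)) pairs.
    allowance: largest overlap allowed, as a fraction of the smaller square's area.
    """
    kept = []
    for _error, square in sorted(candidates, key=lambda c: c[0]):
        if all(
            overlap_area(square, other) <= allowance * min(square[2], other[2]) ** 2
            for other in kept
        ):
            kept.append(square)
    return kept


candidates = [(1.0, (0, 0, 10)), (2.0, (9, 0, 10)), (3.0, (5, 0, 10))]
strict = select_squares(candidates, 0.0)     # no overlap allowed at all
relaxed = select_squares(candidates, 0.15)   # up to 15% overlap allowed
print(strict, relaxed)
```

With a zero allowance the second square is dropped because it touches the first by a thin strip. With a small allowance it is kept, while the heavily overlapping third square is still rejected.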

Sample Results

main --findface group\oldclass_neutral.tga eigenface .6 .9 .01 mark 32 oldclass_result.tga

Methodology

I computed 6 eigenfaces from the cropped, non-smiling student images and constructed a userbase.

Questions

Question 1:

I then ran "main --verifyface" many times to gather experimental data. I tried seven different MSE thresholds: 60000, 120000, 90000, 75000, 82500, 78750, and 76500.

The results are as follows. (The first rate is the rate of correct face recognition; the second rate is the rate of wrong recognition.)

60000 (20/33, 1/96)

120000 (29/33, 4/96)

90000 (28/33, 3/96)

75000 (23/33, 1/96)

82500 (26/33, 3/96)

78750 (26/33, 3/96)

76500 (25/33, 2/96)

From my perspective, 76500 works best. (Since we really don't want to wrongly recognize somebody, I regard the second rate as the more important one.)

I used a binary search to find it.

Question 2:

As we can see from the data above, for the best MSE threshold, the false positive rate (a face wrongly verified) is 2/96 (roughly 2%) and the false negative rate (a correct face rejected) is 8/33 (roughly 24%).
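The arithmetic behind these rates can be checked with a short script. It copies the counts from the results listed above and assumes that the 33 trials pair each person with their own identity and the 96 trials pair a face with someone else's identity. The variable and function names are hypothetical.

```python
# threshold: (correct recognitions out of 33, wrong recognitions out of 96)
results = {
    60000: (20, 1),
    120000: (29, 4),
    90000: (28, 3),
    75000: (23, 1),
    82500: (26, 3),
    78750: (26, 3),
    76500: (25, 2),
}

GENUINE_TRIALS = 33   # assumed: faces tested against their own identity
IMPOSTOR_TRIALS = 96  # assumed: faces tested against someone else's identity


def rates(threshold):
    """Return (share of correct faces rejected, share of wrong faces accepted)."""
    correct, wrong = results[threshold]
    missed = (GENUINE_TRIALS - correct) / GENUINE_TRIALS
    wrongly_accepted = wrong / IMPOSTOR_TRIALS
    return missed, wrongly_accepted


missed, wrongly_accepted = rates(76500)
print(f"rejected correct faces: {missed:.1%}, accepted wrong faces: {wrongly_accepted:.1%}")
```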

Discussion

In some cases, verification is really impossible. The two images below are a neutral face and a smiling face of a student in our class. This pair is far from verifiable. I think handling it is beyond what we can do with just the knowledge taught in this class.