EigenFaces

Jack Menzel

This was the average face:

These were the eigenfaces (displayed here in decreasing order from left to right):

The top row of the following table shows the images the eigenfaces were derived from. The bottom row shows the faces to be identified.

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | 28 | 29 | 30 | 31 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | |
| | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | | |

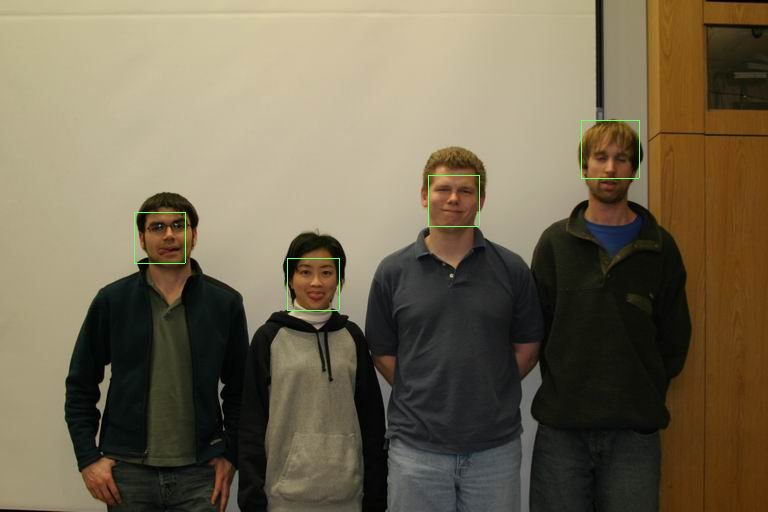

Identifying faces

The chart plots the results of applying this algorithm. Notice how after about 18 eigenfaces, no additional faces are successfully identified with each eigenface you add. Examples of faces that could never be successfully identified are:

MISS 00 incorrectly identified as 02

MISS 01 incorrectly identified as 31

MISS 03 incorrectly identified as 31

MISS 05 incorrectly identified as 11

MISS 06 incorrectly identified as 30

MISS 07 incorrectly identified as 18

MISS 08 incorrectly identified as 14

MISS 10 incorrectly identified as 31

MISS 13 incorrectly identified as 18

MISS 15 incorrectly identified as 11

MISS 19 incorrectly identified as 17

MISS 21 incorrectly identified as 22

MISS 23 incorrectly identified as 14

Examining these misses shows that almost all of these images contain either a change in shape (a result of folks opening their mouths very wide) or rotation. One notable exception was 8 being identified as 14, which was caused by the fact that these two people really do look _very_ similar (at first I thought there was a duplicate face in the data; only after looking closely could I discern that they were two different people).

Out of the 12 misses, only 2 had the correct face among their top 5 matches. This suggests that my current implementation of this algorithm really doesn't handle rotation or change of shape well at all.
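The top-5 check described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes each face is already represented by its eigenface coefficients and that matches are ranked by Euclidean distance. The function names (`rank_matches`, `in_top_k`) and the toy coefficient values are hypothetical.

```python
import math

def rank_matches(probe, gallery):
    """Return gallery labels sorted from closest to farthest from the probe.

    probe:   list of eigenface coefficients for the face to identify
    gallery: dict mapping a label to the coefficients of a known face
    """
    def dist(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return sorted(gallery, key=lambda label: dist(probe, gallery[label]))

def in_top_k(probe, true_label, gallery, k=5):
    """True if the correct label is among the k closest gallery faces."""
    return true_label in rank_matches(probe, gallery)[:k]

# Toy coefficients (made up for illustration only).
gallery = {"00": [0.0, 0.0], "02": [1.0, 0.0], "31": [5.0, 5.0]}
probe = [0.8, 0.1]  # a face whose true label is "00"
print(rank_matches(probe, gallery))
print(in_top_k(probe, "00", gallery, k=2))
```

In this toy case the probe is closest to "02", so it would count as a miss, but the correct label still appears in the top 2.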

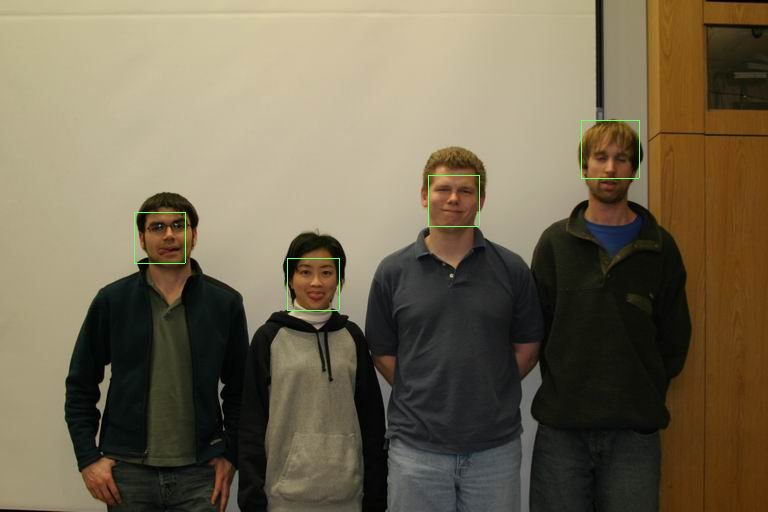

Finding Faces

My face-finding implementation is the standard implementation. It was able to find the president's face in this image:

However, that was with the color heuristic turned off. Turning the color heuristic on (1 standard deviation) still gives you a face. However, it did so in a peculiar, seemingly pseudo-artistic manner reminiscent of Heart of Darkness, which I actually found very appropriate:

I think my find-face implementation likes to crop faces off center because I divide the error of the reconstructed face by the variance. So if your actual face match is weak, you may end up getting half of the face and half background.

My implementation was reasonably successful at identifying faces. It is basically the standard implementation. Each candidate area is scored by taking the MSE between the area in question and its reconstructed face. Then, in order to address areas of low texture or areas close to the face space but not face-like, the MSE is multiplied by the difference to the average and divided by the variance.
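As a rough sketch of the scoring described above (not the original code), the following assumes image patches are flat lists of grayscale intensities, the eigenfaces are orthonormal, "difference to the average" means the MSE between the patch and the average face, and "variance" means the variance of the patch's own intensities. All function names and the `eps` guard against division by zero are assumptions made for illustration.

```python
def project(patch, mean, eigenfaces):
    """Coefficients of (patch - mean) along each orthonormal eigenface."""
    centered = [p - m for p, m in zip(patch, mean)]
    return [sum(c * e for c, e in zip(centered, face)) for face in eigenfaces]

def reconstruct(coeffs, mean, eigenfaces):
    """Rebuild a patch from the average face plus weighted eigenfaces."""
    out = list(mean)
    for w, face in zip(coeffs, eigenfaces):
        for i, e in enumerate(face):
            out[i] += w * e
    return out

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def variance(values):
    mu = sum(values) / len(values)
    return sum((x - mu) ** 2 for x in values) / len(values)

def face_score(patch, mean, eigenfaces, eps=1e-9):
    """Lower is more face-like: reconstruction MSE * distance to mean / variance."""
    recon = reconstruct(project(patch, mean, eigenfaces), mean, eigenfaces)
    return mse(patch, recon) * mse(patch, mean) / (variance(patch) + eps)

# Toy 4-pixel "images" with a single unit-length eigenface.
mean = [0.5, 0.5, 0.5, 0.5]
eigenfaces = [[0.5, 0.5, -0.5, -0.5]]
face_like = [1.0, 1.0, 0.0, 0.0]   # lies exactly in the face space
off_face = [1.0, 0.0, 1.0, 0.0]    # textured, but not in the face space
flat = [0.2, 0.2, 0.2, 0.2]        # no texture at all
for name, patch in [("face_like", face_like), ("off_face", off_face), ("flat", flat)]:
    print(name, face_score(patch, mean, eigenfaces))
```

With these toy values the face-like patch scores best, and the flat patch is penalized most heavily because its variance is near zero.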

In order to improve the speed of the algorithm and to reduce the probability that the algorithm would choose areas that partially overlap with faces, or very face-like areas with high variance, I also implemented a simple face color detection algorithm, the results of which can be seen below.

The way this works is that when the training faces are analyzed for the creation of the eigenfaces, the program calculates the average and standard deviation of the R, G, and B values of the training data. These values are then stored in the .face file for later use in find faces.
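A minimal sketch of the color statistics step, assuming the training pixels are available as `(r, g, b)` tuples. The function name `channel_stats` is hypothetical, the sample pixel values are made up, and writing the result into the .face file is left out because its layout is not described here.

```python
import math

def channel_stats(pixels):
    """Return (means, stds) for the R, G, and B channels of the given pixels."""
    pixels = list(pixels)
    n = len(pixels)
    means = tuple(sum(p[c] for p in pixels) / n for c in range(3))
    stds = tuple(
        math.sqrt(sum((p[c] - means[c]) ** 2 for p in pixels) / n)
        for c in range(3)
    )
    return means, stds

# Two made-up training pixels.
means, stds = channel_stats([(200, 150, 120), (220, 170, 140)])
print(means, stds)
```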

The average and standard deviation color data of the training faces is then used to reject pixels in find faces. There are three heuristics that make use of this. First, a pixel is only considered if its difference from the average falls within the standard deviation for that color multiplied by a threshold value specified on the command line.

Secondly, the average RGB colors of the face region being tested should also fall within the standard deviation multiplied by the threshold.

Finally, the difference from the average colors is multiplied by the MSE such that the algorithm will favor faces that on average are closer to the average face color.
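The three heuristics above might look something like the sketch below. It is an illustration under assumptions rather than the original code: pixels are `(r, g, b)` tuples, the color difference is taken as the sum of absolute per-channel differences, and the names `pixel_is_skin`, `region_mean_color`, and `color_weighted_score` are hypothetical.

```python
def pixel_is_skin(pixel, means, stds, threshold):
    """Heuristic 1: every channel lies within threshold * std of the mean."""
    return all(abs(pixel[c] - means[c]) <= threshold * stds[c] for c in range(3))

def region_mean_color(region):
    """Average (r, g, b) over all pixels of a candidate region."""
    n = len(region)
    return tuple(sum(p[c] for p in region) / n for c in range(3))

def color_weighted_score(mse, region, means, stds, threshold):
    """Heuristics 2 and 3: reject off-color regions, else scale the MSE.

    Returns None when the region's average color is out of range; otherwise
    the MSE multiplied by the region's color difference from the training mean.
    """
    avg = region_mean_color(region)
    if not pixel_is_skin(avg, means, stds, threshold):
        return None
    color_diff = sum(abs(avg[c] - means[c]) for c in range(3))
    return mse * color_diff

# Made-up training statistics and sample values.
means, stds = (210.0, 160.0, 130.0), (10.0, 10.0, 10.0)
print(pixel_is_skin((205, 158, 133), means, stds, 0.5))
print(color_weighted_score(2.0, [(212, 160, 130), (214, 160, 130)], means, stds, 0.5))
print(color_weighted_score(2.0, [(250, 250, 250)], means, stds, 0.5))
```

Note that a plain product gives a score of zero to any region whose average color exactly matches the training mean, so a real implementation may want an offset or a different weighting.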

In practice this works great for the images taken in class! The algorithm is able to very easily find most face pixels with very little error (see skin image below). When using a threshold value of 0.5, this resulted in a 30-fold increase in the speed of the algorithm and increased accuracy by ruling out areas like hands, which have face-like characteristics but incorrect color.

(Image generated with the following params: --findface group\IMG_8270.tga eig10.face 0.2 0.5 0.01 mark 4 imageres.tga 0.75)

This is the resulting error image where light areas are possible faces.

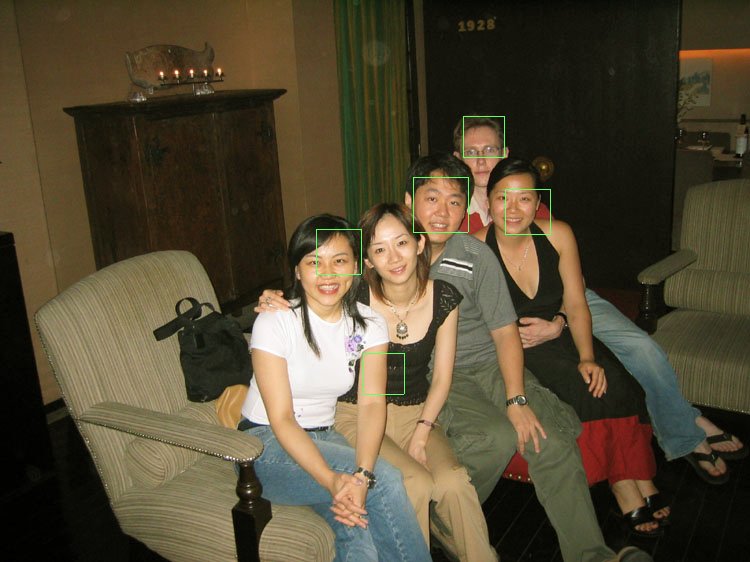

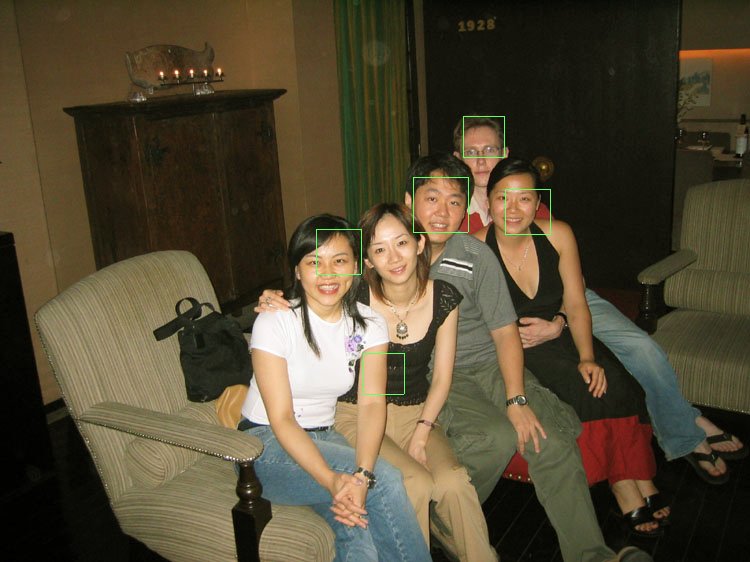

However, when I tried running the same algorithm on images not taken in the classroom, I got some very surprising results. The color matching algorithm started to do the opposite of what was expected. It started to return all pixels that weren't skin, even when choosing images shot indoors under low light like the classroom images. Disabling the color heuristic makes the application take 30x longer to run; however, it can still find most faces!

(Generated using --findface group.tga eig10.face .2 .6 .05 mark 5 groupres.tga 5000)

It only missed one face, and I would guess that is because her head is tilted, which tends to confuse the algorithm.

This image shows that skin detection doesn't work here at all:

On other images the results were not as bad, however they were by no means

useful:

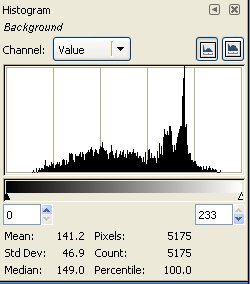

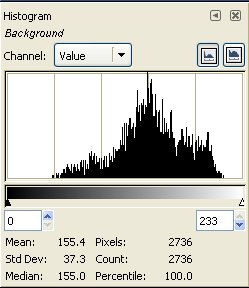

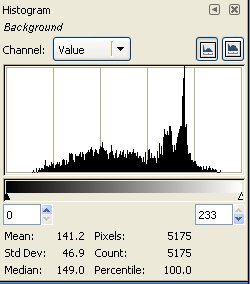

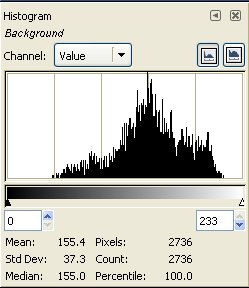

What is causing this discrepancy? It turns out that lighting conditions cause the image values to vary greatly. Comparing the histograms of the three images, you notice that the inside image has peaks in completely different areas of the histogram, and the difference between the outside and inside images is like night and day (pun intended).

| Sample Face | Outside Face |
|---|---|
| | |
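A histogram comparison like the one described above could be sketched as follows. This is only an illustration: it assumes 8-bit `(r, g, b)` pixels, and the function names `channel_histogram` and `peak_bin`, the bin count, and the sample pixels are all made up.

```python
def channel_histogram(pixels, channel, bins=8):
    """Count how many pixels fall into each intensity bin for one channel."""
    counts = [0] * bins
    width = 256 / bins
    for p in pixels:
        counts[min(int(p[channel] / width), bins - 1)] += 1
    return counts

def peak_bin(hist):
    """Index of the most populated bin."""
    return max(range(len(hist)), key=hist.__getitem__)

# Made-up pixels: compare where the red channel peaks.
sample = [(0, 0, 0), (255, 0, 0), (31, 0, 0), (32, 0, 0)]
hist = channel_histogram(sample, channel=0, bins=8)
print(hist, peak_bin(hist))
```

Comparing `peak_bin` for a training face and for a face shot under different lighting gives a quick check of whether the stored color statistics are likely to transfer.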

And here is an image of me in front of a museum in Shanghai where it found the face with no problem (with the color heuristic disabled):

(generated using: --findface china.tga eig10.face 0.4 0.5 0.05 mark 1 me.tga 256)

Bells and Whistles

- Implemented speed up that ALMOST works!

- Implemented face color detection which works fabulously for the class images and is worthless for everything else. This makes the class image processing REALLY REALLY fast (you can search from 0.1 to 1.0 by 0.1 in about 1 minute)!

- Implemented a simple speedup where you only walk every other pixel.

- Implemented verifyface, though I haven't done the experiments.
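The every-other-pixel speedup from the list above can be sketched as a strided window scan. This is a minimal illustration assuming square search windows addressed by their top-left corner; the name `candidate_positions` and its parameters are hypothetical.

```python
def candidate_positions(width, height, win, step=2):
    """Yield the top-left (x, y) of each window, visiting every step-th pixel."""
    for y in range(0, height - win + 1, step):
        for x in range(0, width - win + 1, step):
            yield (x, y)

# A 6x4 image scanned with a 2x2 window.
dense = list(candidate_positions(6, 4, 2, step=1))
sparse = list(candidate_positions(6, 4, 2, step=2))
print(len(dense), len(sparse))
```

On large images a step of 2 visits roughly a quarter of the window positions, at the cost of possibly missing the best alignment by one pixel.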