Xiangyun Meng

Paul G. Allen School of Computer Science and Engineering, University of Washington

3800 E Stevens Way NE, Seattle WA 98195

xiangyun (at) cs (dot) washington (dot) edu

CV

Short Bio

I obtained my bachelor's degree from the National University of Singapore (NUS). Currently I am a PhD candidate at the University of Washington under Prof. Dieter Fox.

Research Interests

My research interests are in robot perception, planning and control. I am especially interested in developing algorithms and representations to seamlessly connect perception, planning and control to improve the capability and robustness of robots in the open world.

I have worked on several aspects of this theme in the context of robot navigation, agile locomotion, and manipulation. On the perception front, I have worked on scene representation for off-road driving and domain adaptation to improve generalization. For planning, I developed compact topological world models that do not require accurate geometric mapping. For control, I connect learning and physics, e.g. using imitation learning to learn controllers from vision, and using reinforcement learning to learn agile quadrupedal locomotion skills.

My focus has always been robust execution in the open world with minimal assumptions. Hence, having large-scale datasets for training has been crucial. Due to the steep cost of collecting real-world data, I invest in scaling up low-cost, high-quality data generation using photorealistic simulators, such as Gibson and Isaac Sim. I believe this will eventually allow us to train robots in simulation and deploy them in the real world. It also enables large-scale, reproducible evaluation to find corner cases, which is key to making robots robust, safe, and predictable in the real world.

Latest Projects

Robotic Autonomy in Complex Environments with Resiliency (RACER)

[news] [website] [promotional video] I am one of the perception leads of the UW RACER team. We are developing a self-driving system that enables a passenger-size vehicle to drive aggressively on complex terrain. We are conducting cutting-edge research in robotics and computer vision, and developing practical methods that enable a real vehicle to navigate at high speed in complex environments.

V-STRONG: Visual Self-Supervised Traversability Learning for Off-road Navigation

Sanghun Jung, JoonHo Lee, Xiangyun Meng, Byron Boots, and Alexander Lambert

ICRA 2024

[paper] We show how SAM (Segment Anything Model) can predict high-fidelity traversability maps from human driving. The key intuition is to leverage the masks produced by SAM as object priors. This allows us to sample positive and negative regions with object boundaries in mind, producing crisper traversability maps.

CAJun: Continuous Adaptive Jumping using a Learned Centroidal Controller

Yuxiang Yang, Guanya Shi, Xiangyun Meng, Wenhao Yu, Tingnan Zhang, Jie Tan, Byron Boots

CoRL 2023

[paper] [website] CAJun is a novel hierarchical learning and control framework that enables legged robots to jump continuously with adaptive jumping distances. With just 20 minutes of training on a single GPU, CAJun can achieve continuous, long jumps with adaptive distances on a Go1 robot with small sim-to-real gaps.

TerrainNet: Visual Modeling of Complex Terrains for High-speed, Off-road Navigation

Xiangyun Meng, Nathan Hatch, Alexander Lambert, Anqi Li, Nolan Wagener, Matthew Schmittle, JoonHo Lee, Wentao Yuan, Zoey Chen, Samuel Deng, Greg Okopal, Dieter Fox, Byron Boots, Amirreza Shaban

RSS 2023

[paper] TerrainNet learns to predict multi-layer terrain semantics and elevation features from multiple stereo cameras in a temporally consistent manner. It is fast and works well with off-the-shelf planners. It serves as one of the critical components in our autonomous driving stack in the DARPA RACER challenge.

Learning Semantic-Aware Locomotion Skills from Human Demonstration

Yuxiang Yang, Xiangyun Meng, Wenhao Yu, Tingnan Zhang, Jie Tan, Byron Boots

CoRL 2022

[paper] [blog] The semantics of the environment, such as the terrain type and property, reveal important information for legged robots to adjust their behaviors. In this work, we present a framework that learns semantics-aware locomotion skills from perception for quadrupedal robots, such that the robot can traverse complex off-road terrains with appropriate speeds and gaits using perception information. Due to the lack of high-fidelity outdoor simulation, our framework needs to be trained directly in the real world, which brings unique challenges in data efficiency and safety. To ensure sample efficiency, we pre-train the perception model with an off-road driving dataset. To avoid the risks of real-world policy exploration, we leverage human demonstration to train a speed policy that selects a desired forward speed from camera images. For maximum traversability, we pair the speed policy with a gait selector, which selects a robust locomotion gait for each forward speed. Using only 40 minutes of human demonstration data, our framework learns to adjust the speed and gait of the robot based on perceived terrain semantics, and enables the robot to walk over 6 km without failure at close-to-optimal speed.

Hierarchical Policies for Cluttered-Scene Grasping with Latent Plans

Lirui Wang, Xiangyun Meng, Yu Xiang, Dieter Fox

RA-L and ICRA 2022

[paper] [code] 6D grasping in cluttered scenes is a longstanding problem in robotic manipulation. Open-loop manipulation pipelines may fail due to inaccurate state estimation, while most end-to-end grasping methods have not yet scaled to complex scenes with obstacles. In this work, we propose a new method for end-to-end learning of 6D grasping in cluttered scenes. Our hierarchical framework learns collision-free target-driven grasping based on partial point cloud observations. We learn an embedding space to encode expert grasping plans during training and a variational autoencoder to sample diverse grasping trajectories at test time. Furthermore, we train a critic network for plan selection and an option classifier for switching to an instance grasping policy through hierarchical reinforcement learning. We evaluate our method and compare against several baselines in simulation, as well as demonstrate that our latent planning can generalize to real-world cluttered-scene grasping tasks.

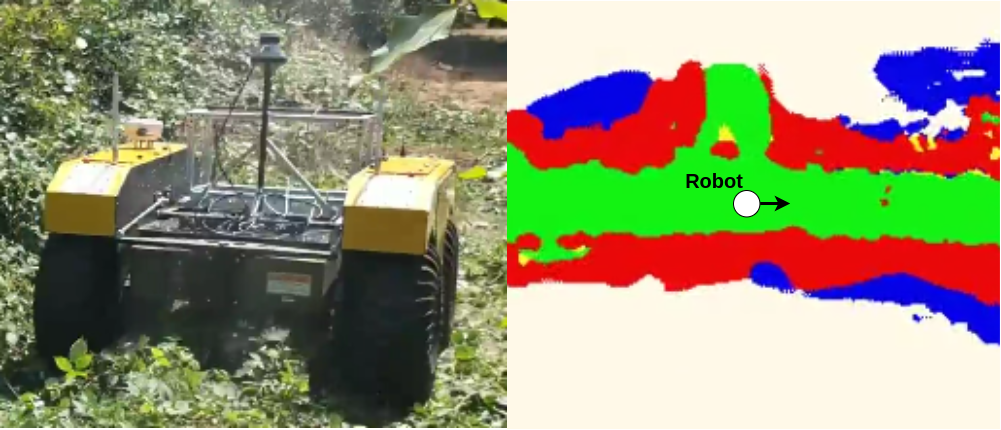

Semantic Terrain Classification for Off-road Autonomous Driving

Amirreza Shaban∗, Xiangyun Meng∗, JoonHo Lee∗, Byron Boots, Dieter Fox

∗Equal Contribution

CoRL 2021

[paper] [website] [code] Producing dense and accurate traversability maps is crucial for autonomous off-road navigation. In this paper, we focus on the problem of classifying terrains into 4 cost classes (free, low-cost, medium-cost, obstacle) for traversability assessment. This requires a robot to reason about both semantics (what objects are present?) and geometric properties (where are the objects located?) of the environment. To achieve this goal, we develop a novel Bird’s Eye View Network (BEVNet), a deep neural network that directly predicts a local map encoding terrain classes from sparse LiDAR inputs. BEVNet processes both geometric and semantic information in a temporally consistent fashion. More importantly, it uses learned prior and history to predict terrain classes in unseen space and into the future, allowing a robot to better appraise its situation. We quantitatively evaluate BEVNet on both on-road and off-road scenarios and show that it outperforms a variety of strong baselines.

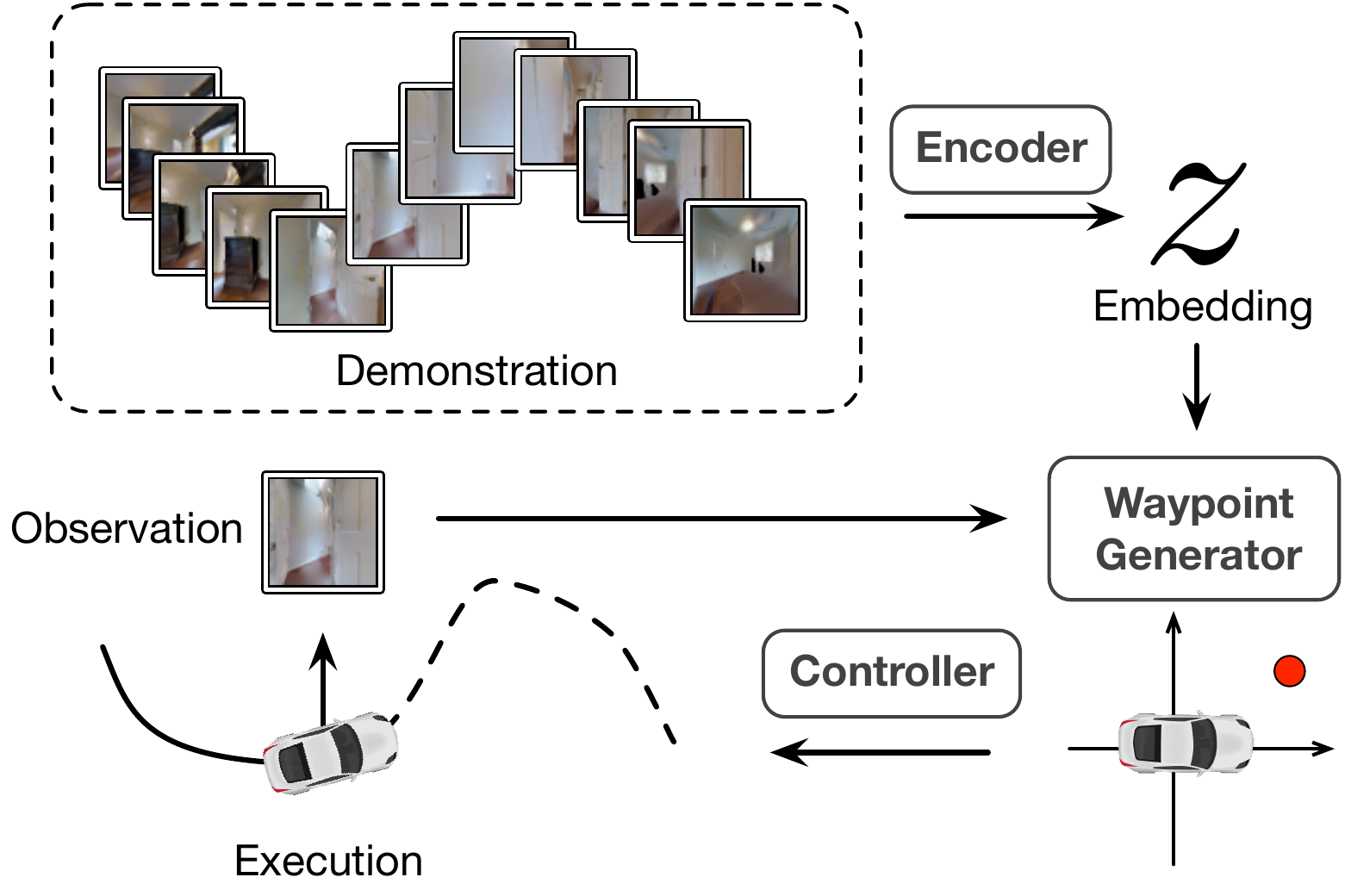

Learning Composable Behavior Embeddings for Long-horizon Visual Navigation

Xiangyun Meng, Yu Xiang and Dieter Fox

RA-L and ICRA 2021

[paper] [website] [code] Learning high-level navigation behaviors has important implications: it enables robots to build compact visual memory for repeating demonstrations and to build sparse topological maps for planning in novel environments. Existing approaches only learn discrete, short-horizon behaviors. These standalone behaviors usually assume a discrete action space with simple robot dynamics, thus they cannot capture the intricacy and complexity of real-world trajectories. To this end, we propose Composable Behavior Embedding (CBE), a continuous behavior representation for long-horizon visual navigation. CBE is learned in an end-to-end fashion; it effectively captures path geometry and is robust to unseen obstacles. We show that CBE can be used to perform memory-efficient path following and topological mapping, using more than an order of magnitude less memory than behavior-less approaches.

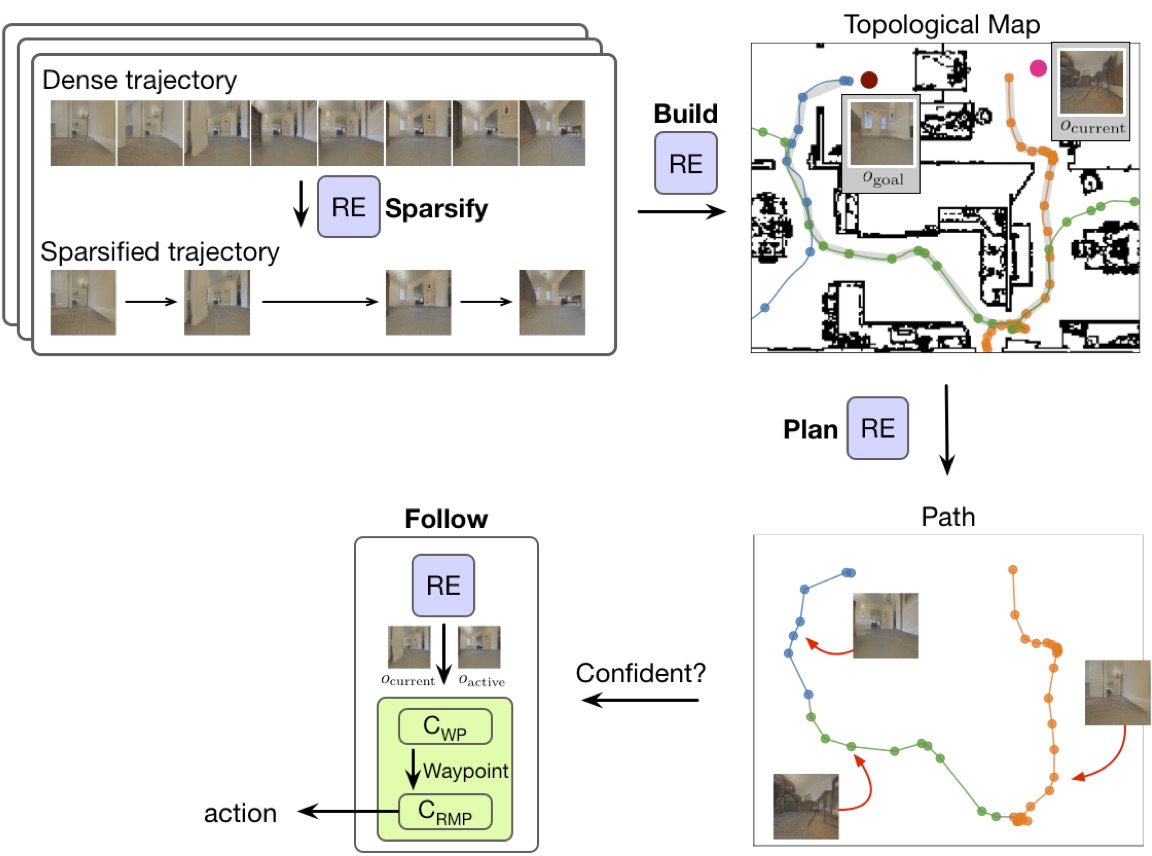

Scaling Local Control to Large Scale Topological Navigation

Xiangyun Meng, Nathan Ratliff, Yu Xiang and Dieter Fox

ICRA 2020

[paper] [website] [code] Visual topological navigation has been revitalized recently thanks to the advancement of deep learning that substantially improves robot perception. However, scalability and reliability remain challenging due to the complexity and ambiguity of real-world images and the mechanical constraints of real robots. We present an intuitive solution to show that by accurately measuring the capability of a local controller, large-scale visual topological navigation can be achieved while being scalable and robust. Our approach achieves state-of-the-art results in trajectory following and planning in large-scale environments. It also generalizes well to real robots and new environments without finetuning.

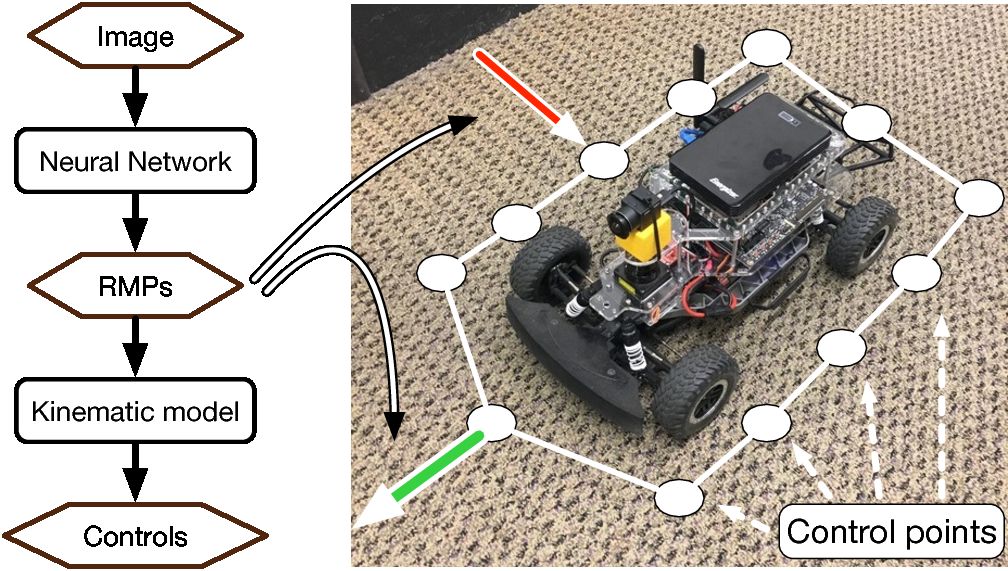

Neural Autonomous Navigation with Riemannian Motion Policy

Xiangyun Meng, Nathan Ratliff, Yu Xiang and Dieter Fox

ICRA 2019

[paper] [website] [code] End-to-end learning for autonomous navigation has received substantial attention recently as a promising method for reducing modeling error. However, its data complexity, especially around generalization to unseen environments, is high. We introduce a novel image-based autonomous navigation technique that leverages policy structure using the Riemannian Motion Policy (RMP) framework for deep learning of vehicular control. We design a deep neural network to predict control point RMPs of the vehicle from visual images, from which the optimal control commands can be computed analytically. We show that our network trained in the Gibson environment can be used for indoor obstacle avoidance and navigation on a real RC car, and our RMP representation generalizes better to unseen environments than predicting local geometry or predicting control commands directly.

Fun Projects

A baseball bot trained in simulation using RL and transferred to a real PR2 robot

A neural policy enabling a 7-DoF robotic arm (on the PR2 robot) to hit a high-speed ball (> 8 m/s) thrown at it, learned without supervision.

The policy is first learned through reinforcement learning in the MuJoCo simulator and later transferred to the real robot.

The real robot uses 30 Hz depth images to estimate the ball state. From the moment the ball is thrown until it reaches the robot, the robot has only about 0.3 seconds to react.

Surprisingly, the robot also learns to act like a Jedi!

Joint work with Boling Yang and Felix Leeb.

Pre-PhD Research Projects

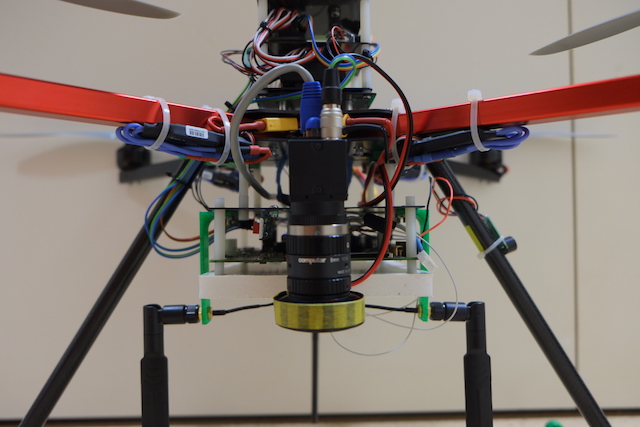

Most of the projects have a separate web page showing more details (demo, images, videos, etc.). Enjoy!

SkyStitch

ACM Multimedia 2015

SkyStitch is a multi-UAV based video surveillance system. Compared with a traditional video surveillance system that captures videos with a single camera, SkyStitch removes the constraints of field of view and resolution by deploying multiple UAVs to cover the target area. Videos captured from all UAVs are streamed to the ground station, where they are stitched together to provide a panoramic view of the area in real time.

This is the biggest project I have ever accomplished. It consists of 16k lines of highly optimized code running on heterogeneous processors (x86 & ARM CPU, desktop & mobile GPU, microcontroller on the flight controller, etc.). Moreover, there was a great deal of mechanical work to do. We built 4 generations of prototypes and had to deal with numerous crashes.

More information can be found on the project webpage.