For robots to operate safely in human environments, they must be able to accurately track the pose of humans and other dynamic articulated objects, both to avoid collisions and to perform physical interaction safely. The last several years have seen significant progress in using depth cameras to track articulated objects such as human bodies, hands, and robotic manipulators. Most approaches focus on tracking the skeletal parameters of a fixed shape model, which makes them insufficient for applications that require accurate estimates of deformable object surfaces. To overcome this limitation, we present a 3D model-based tracking system for articulated deformable objects. Our system tracks human body pose and high-resolution surface contours in real time using a commodity depth sensor and GPU hardware.

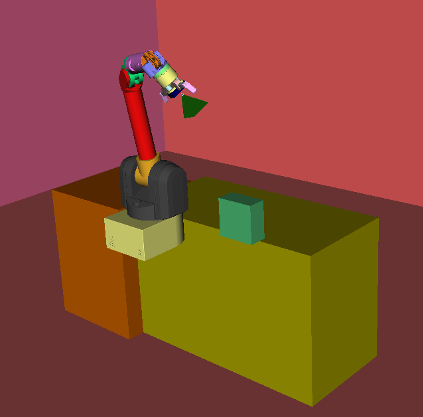

In this work, we explore the use of deep learning to learn a notion of physical intuition. We introduce SE3-Nets, deep networks designed to model rigid body motion from raw point cloud data. Given only pairs of 3D point clouds, a continuous action vector, and point-wise data associations, SE3-Nets learn to segment affected object parts and predict their motion resulting from the applied force. On three simulated scenarios, we show that the structure underlying SE3-Nets enables far more consistent predictions of object motion than traditional flow-based networks.
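To make the structure concrete, here is a minimal sketch of how per-point soft masks and a small set of rigid transforms could combine into a motion prediction: each point moves by a mask-weighted blend of K SE(3) transforms. This is an illustration under assumptions, not the SE3-Nets code; the network that predicts the masks and transforms is omitted, and the helper names (`rot_z`, `apply_se3`, `blend_se3`) are hypothetical.

```python
import math


def rot_z(theta):
    """3x3 rotation about the z axis, as row-major nested lists."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply_se3(R, t, p):
    """Rigidly transform point p: R @ p + t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def blend_se3(points, masks, transforms):
    """Predict each point's next position as a mask-weighted blend of K rigid motions.

    points:     list of N 3D points
    masks:      N x K soft assignments of points to parts (each row sums to 1)
    transforms: list of K (R, t) pairs, one rigid motion per part
    """
    out = []
    for p, m in zip(points, masks):
        moved = [apply_se3(R, t, p) for (R, t) in transforms]
        out.append([sum(m[k] * moved[k][i] for k in range(len(transforms)))
                    for i in range(3)])
    return out


# Usage: part 0 is a static background, part 1 rotates 90 degrees about z and shifts in x.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
parts = [(identity, [0.0, 0.0, 0.0]), (rot_z(math.pi / 2), [0.1, 0.0, 0.0])]
predicted = blend_se3([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]], parts)
```

Because every point is explained by one of only a few rigid motions, points assigned to the same part move consistently with one another, which is one plausible reading of why this structure yields more consistent predictions than independent per-point flow.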

To function in unstructured environments, robots need the ability to recognize unseen novel objects. We take a step in this direction by tackling the problem of segmenting unseen object instances in tabletop environments. However, the type of large-scale real-world dataset required for this task typically does not exist for most robotic settings, which motivates the use of synthetic data. We propose a novel method that separately leverages synthetic RGB and synthetic depth for unseen object instance segmentation. Our method consists of two stages: the first operates only on depth to produce rough initial masks, and the second refines these masks using RGB. Surprisingly, our framework is able to learn from synthetic RGB-D data even when the RGB is non-photorealistic. To train our method, we introduce a large-scale synthetic dataset of random objects on tabletops. We show that our method, trained on this dataset, can produce sharp and accurate masks, outperforming state-of-the-art methods on unseen object instance segmentation. We also show that our method can segment unseen objects for robot grasping. Code, models and video can be found at https://rse-lab.cs.washington.edu/projects/unseen-object-instance-segmen....

Estimating the 6D pose of objects from images is an important problem in various applications such as robot manipulation and virtual reality. While direct regression from images to object poses has limited accuracy, matching rendered images of an object against the input image can produce accurate results. In this work, we propose a novel deep neural network for 6D pose matching named DeepIM. Given an initial pose estimate, our network iteratively refines the pose by matching the rendered image against the observed image. The network is trained to predict a relative pose transformation using an untangled representation of 3D location and 3D orientation, together with an iterative training process. Experiments on two commonly used benchmarks for 6D pose estimation demonstrate that DeepIM achieves large improvements over state-of-the-art methods. We furthermore show that DeepIM is able to match previously unseen objects.
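The sketch below illustrates one way to untangle translation from rotation, assuming the parameterization we recall from the DeepIM paper: the translation update is expressed as a pixel shift of the projected object center plus a log depth ratio, so that it is decoupled from the rotation update. The pinhole focal lengths `fx`, `fy` and the function names are assumptions; the rotation part and the network itself are omitted.

```python
import math


def encode_translation(t_src, t_tgt, fx, fy):
    """Relative translation as (image-plane shift in pixels, log depth ratio).

    t_src is the current pose estimate's translation, t_tgt the target translation,
    both in camera coordinates (x, y, z) with z > 0.
    """
    xs, ys, zs = t_src
    xt, yt, zt = t_tgt
    vx = fx * (xt / zt - xs / zs)  # horizontal shift of the projected center
    vy = fy * (yt / zt - ys / zs)  # vertical shift of the projected center
    vz = math.log(zs / zt)         # scale-like depth change
    return vx, vy, vz


def decode_translation(t_src, v, fx, fy):
    """Apply an encoded update v to t_src, inverting encode_translation."""
    xs, ys, zs = t_src
    vx, vy, vz = v
    zt = zs / math.exp(vz)
    xt = (vx / fx + xs / zs) * zt
    yt = (vy / fy + ys / zs) * zt
    return xt, yt, zt


# Usage: one refinement step moves the estimate from src to the predicted target.
src = (0.1, -0.05, 1.0)
tgt = (0.12, -0.02, 0.9)
update = encode_translation(src, tgt, 500.0, 500.0)
refined = decode_translation(src, update, 500.0, 500.0)
```

In an iterative refiner, the decoded translation would become the next initial estimate, and the object would be re-rendered at the new pose before the next matching step.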

Estimating the 6D pose of known objects is important for robots to interact with the real world. The problem is challenging due to the variety of objects as well as the complexity of scenes caused by clutter and occlusions between objects. In this work, we introduce PoseCNN, a new Convolutional Neural Network for 6D object pose estimation. PoseCNN estimates the 3D translation of an object by localizing its center in the image and predicting its distance from the camera. The 3D rotation of the object is estimated by regressing to a quaternion representation. We also introduce a novel loss function that enables PoseCNN to handle symmetric objects. In addition, we contribute a large-scale video dataset for 6D object pose estimation named the YCB-Video dataset. Our dataset provides accurate 6D poses of 21 objects from the YCB dataset observed in 92 videos with 133,827 frames. We conduct extensive experiments on our YCB-Video dataset and the OccludedLINEMOD dataset to show that PoseCNN is highly robust to occlusions, can handle symmetric objects, and provides accurate pose estimates using only color images as input. When depth data is used to further refine the poses, our approach achieves state-of-the-art results on the challenging OccludedLINEMOD dataset.
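The abstract leaves the geometry implicit, so here is a minimal sketch under stated assumptions. Translation is recovered by back-projecting the predicted 2D center `(cx, cy)` at the predicted depth `tz` through a pinhole camera with focal lengths `fx`, `fy` and principal point `(px, py)`. For symmetric objects, the loss compares each model point under the predicted rotation with its closest point under the ground-truth rotation, so rotations that map the shape onto itself incur no penalty; we believe this matches the idea behind PoseCNN's symmetric loss, but the function names and details here are our own.

```python
import math


def translation_from_center(cx, cy, tz, fx, fy, px, py):
    """Back-project a predicted 2D object center at depth tz through a pinhole camera."""
    tx = (cx - px) * tz / fx
    ty = (cy - py) * tz / fy
    return tx, ty, tz


def quat_to_rot(q):
    """Quaternion (w, x, y, z), normalized here, to a 3x3 rotation matrix."""
    n = math.sqrt(sum(c * c for c in q))
    w, x, y, z = (c / n for c in q)
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def rotate(R, p):
    return [sum(R[i][j] * p[j] for j in range(3)) for i in range(3)]


def symmetric_loss(q_pred, q_true, model_points):
    """Closest-point rotation loss: zero for any rotation that maps the model onto itself."""
    pred = [rotate(quat_to_rot(q_pred), p) for p in model_points]
    true = [rotate(quat_to_rot(q_true), p) for p in model_points]
    total = 0.0
    for a in pred:
        total += min(sum((a[i] - b[i]) ** 2 for i in range(3)) for b in true)
    return total / (2 * len(model_points))


# Usage: a square outline is symmetric under 90-degree rotations about z.
square = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
quarter_turn = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
identity_q = (1.0, 0.0, 0.0, 0.0)
```

A plain point-to-point loss would penalize `quarter_turn` against `identity_q` on the square even though both poses look identical; the closest-point form does not.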

In this project, we propose a novel crowdsourcing system for inferring hierarchies of concepts. We develop a principled, crowd-powered algorithm that is robust to noise, selects questions efficiently, is cost-effective, and builds high-quality hierarchies.

This project explores an approach towards teaching manipulation tasks to robots via human demonstrations. A human demonstrates the desired task (say, carrying a cup of water without spilling) by physically moving the robot. Given many such kinesthetic demonstrations, the robot applies a learning algorithm to learn a model of the underlying task. In a new scene, the robot uses this task-model to plan a path that satisfies the task requirements.

This project aims to provide a unified framework for tracking any articulated model, given its geometric and kinematic structure. Our approach uses dense input data (computing an error term for every pixel), which we can process in real time by leveraging GPGPU programming and a very efficient representation of model geometry with signed distance functions.
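As a toy illustration of a dense, SDF-based error (not the project's GPU implementation), the sketch below scores a planar two-link chain against observed 2D points: each point is moved into every link's local frame, the model's signed distance is the minimum over the links' capsule SDFs, and the error sums the squared distances. The capsule geometry, planar kinematics, and all function names are assumptions made for brevity.

```python
import math


def capsule_sdf(p, length, radius):
    """Signed distance from local 2D point p to a capsule from (0, 0) to (length, 0)."""
    x = min(max(p[0], 0.0), length)  # closest point on the capsule's axis segment
    return math.hypot(p[0] - x, p[1]) - radius


def link_frames(angles, lengths):
    """Forward kinematics of a planar serial chain: (origin_x, origin_y, heading) per link."""
    frames, ox, oy, heading = [], 0.0, 0.0, 0.0
    for a, l in zip(angles, lengths):
        heading += a
        frames.append((ox, oy, heading))
        ox += l * math.cos(heading)
        oy += l * math.sin(heading)
    return frames


def to_link(p, frame):
    """Express a world point in a link's local frame (inverse rigid transform)."""
    ox, oy, h = frame
    dx, dy = p[0] - ox, p[1] - oy
    c, s = math.cos(h), math.sin(h)
    return (c * dx + s * dy, -s * dx + c * dy)


def dense_error(points, angles, lengths, radius):
    """Sum over observed points of the squared SDF of the whole model (union of links)."""
    frames = link_frames(angles, lengths)
    err = 0.0
    for p in points:
        d = min(capsule_sdf(to_link(p, f), l, radius) for f, l in zip(frames, lengths))
        err += d * d
    return err


# Usage: points sampled on the surface of a chain bent 90 degrees at the second joint.
observed = [(0.5, 0.1), (1.1, 0.5), (0.9, 0.5)]
```

A tracker would minimize `dense_error` over the joint angles, for example with Gauss-Newton; that optimization step is not shown. Evaluating an SDF per observed point avoids explicit data association, which is one reason this kind of error parallelizes well on a GPU.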

A number of long-term goals in robotics, for example using robots in household settings, require robots to interact with humans. In this project, we explore how robots can learn to correlate natural language with the physical world being sensed and manipulated, an area of research that falls under grounded language acquisition.

Hierarchical Matching Pursuit uses sparse coding to learn codebooks at each layer in an unsupervised way and then builds hierarchical feature representations from the learned codebooks. It achieves state-of-the-art results on many types of recognition tasks.
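The core encoding step can be sketched with plain matching pursuit, a simpler greedy relative of the orthogonal matching pursuit that, as we understand it, HMP uses: repeatedly pick the codebook atom most correlated with the residual and subtract its contribution. The codebook below is a hypothetical toy one, atoms are assumed to be unit-norm, and codebook learning and the pooling between layers are omitted.

```python
def matching_pursuit(x, atoms, n_nonzero):
    """Greedy sparse code of vector x over a codebook of unit-norm atoms.

    Returns (coefficients, residual) after n_nonzero greedy selections.
    """
    residual = list(x)
    coeffs = [0.0] * len(atoms)
    for _ in range(n_nonzero):
        # Correlation of the current residual with every atom.
        scores = [sum(r * a for r, a in zip(residual, atom)) for atom in atoms]
        k = max(range(len(atoms)), key=lambda i: abs(scores[i]))
        coeffs[k] += scores[k]
        # Remove the selected atom's contribution from the residual.
        residual = [r - scores[k] * a for r, a in zip(residual, atoms[k])]
    return coeffs, residual


# Usage with a toy codebook (the standard basis of R^3).
codebook = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
code, leftover = matching_pursuit([3.0, 0.0, -2.0], codebook, 2)
```

Limiting `n_nonzero` is what makes the code sparse: with a sparsity of 1, the same input keeps only its strongest component and leaves the rest in the residual.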

The RGB-D Object Dataset is a large dataset of 300 common household objects. The objects are organized into 51 categories arranged using WordNet hypernym-hyponym relationships (similar to ImageNet). This dataset was recorded using a Kinect-style 3D camera that records synchronized and aligned 640x480 RGB and depth images at 30 Hz.

Simultaneous localization and mapping (SLAM) has been a major focus of mobile robotics work for many years. We combine state-of-the-art visual odometry and pose-graph estimation techniques with an RGB-D (color and depth) camera to make accurate, dense maps of indoor environments.
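The bookkeeping behind pose-graph SLAM can be sketched in the plane, assuming SE(2) poses `(x, y, theta)` instead of the 6-DoF camera poses an RGB-D system estimates: visual-odometry steps are chained into a trajectory, and each edge, including loop closures, contributes a residual between its measured relative pose and the one implied by the current estimates. A pose-graph optimizer would adjust the poses to drive these residuals toward zero; the optimizer itself is omitted, and the function names are hypothetical.

```python
import math


def wrap(th):
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(th), math.cos(th))


def compose(a, b):
    """Compose SE(2) poses: apply relative pose b in the frame of pose a."""
    x, y, th = a
    bx, by, bth = b
    c, s = math.cos(th), math.sin(th)
    return (x + c * bx - s * by, y + s * bx + c * by, wrap(th + bth))


def inverse(a):
    """Inverse of an SE(2) pose."""
    x, y, th = a
    c, s = math.cos(th), math.sin(th)
    return (-c * x - s * y, s * x - c * y, wrap(-th))


def chain(odometry):
    """Integrate relative odometry steps into absolute poses, starting at the origin."""
    poses = [(0.0, 0.0, 0.0)]
    for step in odometry:
        poses.append(compose(poses[-1], step))
    return poses


def edge_residual(p_i, p_j, measured):
    """Discrepancy between a measured relative pose and the one implied by p_i and p_j."""
    predicted = compose(inverse(p_i), p_j)
    return compose(inverse(measured), predicted)


# Usage: driving around a unit square should return to the start (loop closure).
square_odometry = [(1.0, 0.0, math.pi / 2)] * 4
trajectory = chain(square_odometry)
```

With perfect odometry the loop-closure residual between the first and last pose is zero; a small per-step heading bias accumulates into a nonzero residual, which is exactly the drift a pose-graph optimizer redistributes over the trajectory.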

In this project, we address joint object category, instance, and pose recognition in the context of rapid advances in RGB-D cameras, which combine both visual and 3D shape information. The focus is on detection and classification of objects in indoor scenes, such as domestic environments.

We introduce an approach for identifying objects based on natural language containing appearance and name attributes.

This project aims at developing and applying novel reinforcement learning methods to low-cost, off-the-shelf robots so that they can learn tasks in only a few trials.

We address the problem of active object investigation using robotic manipulators and Kinect-style RGB-D sensors. To do so, we jointly tackle the issues of sensor-to-robot calibration, manipulator tracking, and 3D object model construction. We additionally consider the problem of motion and grasp planning to maximize coverage of the object.

We segment objects during scene reconstruction rather than afterward, as is usual. The emphasis is on merging information gathered at different points in time to improve existing object and scene models.

The goal of this project is to integrate Gaussian process prediction and observation models into Bayes filters. These GP-BayesFilters are more accurate than standard Bayes filters using parametric models. In addition, GP models naturally supply the process and observation noise necessary for Bayesian filters.
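A minimal sketch of the key property: GP regression returns both a predictive mean and a predictive variance, so a learned model can supply the prediction and the noise term a Bayes filter needs. The squared-exponential kernel, fixed hyperparameters, 1D inputs, and function names are illustrative assumptions; a GP-BayesFilter would learn such models for its motion and observation models from training data.

```python
import math


def sq_exp(a, b, length=1.0, signal=1.0):
    """Squared-exponential covariance between scalar inputs a and b."""
    return signal ** 2 * math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(A):
    """Lower-triangular L with A = L L^T, for a symmetric positive-definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def solve_upper_t(L, y):
    """Back substitution: solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_predict(xs, ys, x_star, noise=0.1, length=1.0, signal=1.0):
    """Predictive mean and variance of a noisy observation at x_star."""
    K = [[sq_exp(a, b, length, signal) + (noise ** 2 if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, ys))  # K^{-1} y
    k_star = [sq_exp(x_star, a, length, signal) for a in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)
    var = sq_exp(x_star, x_star, length, signal) - sum(vi * vi for vi in v) + noise ** 2
    return mean, var


# Usage: learn a 1D "motion model" from a few samples.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [math.sin(x) for x in train_x]
```

Near the training data the predicted variance is small, so a filter using it would trust the learned model; far from the data the variance grows back to the prior, which automatically inflates the filter's noise where the model is uncertain.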

We can't be sure where objects are unless we see them move relative to each other. In this project we investigate using motion as a cue to segment objects. We can make use of passive sensing or active vision, and both long-term and short-term motion, to aid segmentation.