CSE has many file servers providing storage for different purposes. Much of that storage is offered on our network in a single global namespace, which allows users to access the files without knowing where they live using names that suggest what they are for. This document explains how that namespace is structured and how to access it.

Terminology

Files are grouped into collections called "file systems," which may range in size from a few GB to a few TB. Typically, a file system has both a local name and a global, or "canonical," name. The great thing about the canonical name is that the same name works both on the server where the files are local and everywhere else that is configured to have access to them. We are concerned here with canonical names.

When a file system is available on the network (not all are), we say that it is "exported."

As a research unit at a university, we have both an instructional mission and a research mission. Resources that are used for research purposes are on "research" machines and resources that are used for instruction are on "instructional" machines. In general, research resources are exported to lab-supported research machines, and instructional resources are exported to both instructional and lab-supported research machines, as are administrative resources.
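To make the general rule concrete, here is a small shell function that encodes it. It is a hypothetical sketch, not lab tooling: the function name is_exported_to and the class labels research, instructional, and administrative are illustrative, and the actual exports are governed by the lab's configuration, which may have exceptions.

```sh
# Hypothetical sketch of the general export rule described above.
# Usage: is_exported_to RESOURCE_CLASS MACHINE_CLASS
# Returns 0 (true) if resources of that class are, in general, exported
# to machines of that class, and 1 (false) otherwise.
is_exported_to() {
    case "$1:$2" in
        # Research resources: lab-supported research machines only.
        research:research) return 0 ;;
        # Instructional resources: instructional and research machines.
        instructional:instructional | instructional:research) return 0 ;;
        # Administrative resources: the same as instructional ones.
        administrative:instructional | administrative:research) return 0 ;;
        # Anything else, including unknown labels, is treated as not exported.
        *) return 1 ;;
    esac
}
```

For example, is_exported_to research instructional returns false, reflecting that research content stays off instructional machines.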

Naming

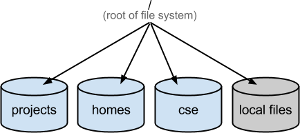

There are only a few branches off the root of our global file system, described here.

Projects

Research and instructional projects are stored under /projects/. For example, the OneBusAway research project has its file content stored in /projects/onebusaway/. Some projects are rapacious enough to need multiple project file systems; the Software Engineering and Programming Languages group is an example, with projects swlab1 through swlab2.

Research project content is available only on research machines.

Instructional project content is under /projects/instr/. For example, projects for Spring 2013 can be found in the /projects/instr/13sp/ tree.

This content is available on both research and instructional machines.
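As a sketch of the term naming pattern, the hypothetical function below builds a term's directory under /projects/instr/ from a year and a quarter code. Only the Spring 2013 example (13sp) comes from this page; the function assumes other terms follow the same two-digit-year-plus-quarter-code pattern and passes the quarter code through unchanged.

```sh
# Hypothetical helper: build the instructional project directory for a term,
# following the pattern of the /projects/instr/13sp/ example.
# Usage: instr_term_dir YEAR QUARTER_CODE   (e.g. instr_term_dir 2013 sp)
instr_term_dir() {
    year=$1          # four-digit year, e.g. 2013
    quarter=$2       # quarter code, e.g. sp (assumed to be used as-is)
    yy=${year#??}    # drop the first two characters: 2013 -> 13
    printf '/projects/instr/%s%s/\n' "$yy" "$quarter"
}
```

instr_term_dir 2013 sp prints /projects/instr/13sp/.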

Homes

Everybody has at least, and typically exactly, one home directory in the global file space. Users with "research accounts" have a home directory with a name like /homes/gws/<user>, while users with instructional accounts have one under /homes/iws/<user>. (GWS stands for "graduate work station," something of a misnomer. IWS? "Instructional work station.")

You already know the pattern: GWS homes are exported only to lab-supported research machines, and IWS homes are exported to instructional machines.
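The hypothetical find_home function below prints whichever home directory a user has, checking the research tree before the instructional one. HOMES_ROOT is an illustrative variable added so the function can be tried against a scratch directory; on a lab machine it would stay at its default, /homes. On a machine that doesn't receive GWS exports, only the /homes/iws check can succeed.

```sh
# Hypothetical helper: print a user's home directory, checking the
# research (gws) tree first and then the instructional (iws) tree.
# HOMES_ROOT is an illustrative override; it defaults to /homes.
find_home() {
    user=$1
    root=${HOMES_ROOT:-/homes}
    for tree in gws iws; do
        if [ -d "$root/$tree/$user" ]; then
            printf '%s\n' "$root/$tree/$user"
            return 0
        fi
    done
    # Neither tree has a directory for this user (or it isn't exported here).
    echo "no gws or iws home found for $user" >&2
    return 1
}
```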

There are other branches under /homes/ that are used for special purposes. For example, /homes/cubist provides a path to home directories on cubist.cs, an instructional projects and web service machine.

CSE

The /cse/ tree is for administrative and miscellaneous content. For example, we use /cse/web/ for web content. /cse/web/courses/ is course web content and /cse/web/research/ is a shared space for research web content. I could tell you where this is exported, but you already know.

Where Is It Hosted?

One of the beauties of the global file system is that you don't need to know where something is stored... except, rarely, when you do. For example, the lab might announce scheduled maintenance of a file server, and you'd like to know if your home directory will be affected. One quick way to tell where something is hosted is with the df command, which you can execute at a Linux command prompt:

    % df /homes/gws/farnsworth
    Filesystem                1K-blocks     Used  Available Use% Mounted on
    cash:/m-u1/u1/farnsworth 8139077632 51748864 8087328768   1% /homes/gws/farnsworth

Here, we learn that user farnsworth has her home directory on a machine called cash.cs. This works with stuff in /projects and (less usefully) /cse, too.
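If you do this often, the hypothetical helper below wraps it. It assumes NFS-style df output, where the Filesystem column reads server:/export/path, and prints the part before the first colon. The -P option asks df for POSIX output format, which keeps each file system on a single line.

```sh
# Hypothetical helper: read df output on stdin and print the server name
# from the Filesystem column of the first data line (server:/export/path).
host_of_df_output() {
    # NR == 2 skips the header; split on ":" and keep the part before it.
    awk 'NR == 2 { split($1, part, ":"); print part[1] }'
}

# Hypothetical wrapper: report which server hosts the given path.
host_of() {
    df -P "$1" | host_of_df_output
}
```

For the output shown above, host_of /homes/gws/farnsworth would print cash. For a local disk, where the Filesystem column has no colon, it prints the device name instead.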

Accessing Files

Linux

File systems are available at their "native" paths on Linux machines to which they are exported. For example, /cse/www is available at that path.

More information on working from Linux machines is collected in Unix/Linux Resources.

Windows

Windows machines access file systems via compatibility software called "Samba" that provides a slightly different root to the file system tree. An example is o:\cs\unix\homes\gws (or, equivalently, \\cseexec.cs.washington.edu\cs\unix\homes\gws), which corresponds to the canonical path /homes/gws.
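Assuming every canonical path maps the same way as the /homes/gws example (only that one mapping is given here), the translation is mechanical: prefix \\cseexec.cs.washington.edu\cs\unix and turn each / into \. The helper name to_unc_path is hypothetical.

```sh
# Hypothetical helper: translate a canonical path into the Samba (UNC) form,
# assuming all paths map like the /homes/gws example.
to_unc_path() {
    # Single quotes keep the backslashes in the prefix literal.
    prefix='\\cseexec.cs.washington.edu\cs\unix'
    # tr turns each "/" into "\" ('\\' is tr's escape for one backslash).
    printf '%s%s\n' "$prefix" "$(printf '%s' "$1" | tr '/' '\\')"
}
```

to_unc_path /homes/gws prints \\cseexec.cs.washington.edu\cs\unix\homes\gws, matching the example above.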

More information on working with files from Windows machines is collected in Windows Resources; see in particular Network File Access and Remote Access.

MacOS

Information on working with files from MacOS machines is in the Network File Access section of Macintosh Resources.

Under the Hood

There are hundreds of file systems on dozens of file servers, and hundreds of clients. File systems come and go regularly, sometimes moving from one file server to another. Less often, file servers are replaced, retired, or renamed and new ones are deployed. Clients also come and go, and change status. Yet somehow those changes are known everywhere they need to be known. And somehow those hundreds of file systems are always there when you need them.

The problem of passing this information around to the clients and servers is a "configuration management" problem. The configuration management system distributes files that say which clients are authorized to mount which file systems, and files that say which file systems are available to be mounted, and from where.

To avoid mounting hundreds of file systems, some of which are rarely or never used on a particular client, we use something called an "automounter." This is a service that sits between the file servers and the clients, mounting file systems as they are needed and discarding each mount once it has been idle for a few minutes. Opening a file on a file system that isn't mounted still succeeds; the automounter silently mounts it first, fast enough that you won't notice.
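One way to watch the automounter at work on Linux is to look for a file system's mount point in /proc/mounts, which lists what is mounted right now. The hypothetical is_mounted function below does that check; its optional second argument is an illustrative addition that lets you point it at a saved copy of the table. It assumes mount points without spaces, which /proc/mounts would escape.

```sh
# Hypothetical helper: succeed if PATH currently appears as a mount point
# (second field) in a mounts table, /proc/mounts by default.
# Usage: is_mounted PATH [TABLE]
is_mounted() {
    mountpoint=$1
    table=${2:-/proc/mounts}
    awk -v mp="$mountpoint" '
        $2 == mp { found = 1 }
        END { exit (found ? 0 : 1) }' "$table"
}
```

Check the individual file system's path, such as /projects/compression2, rather than /projects itself: the automounter typically keeps its own entry for the parent directory in the table at all times. After a few idle minutes, the entry for the file system itself should drop out again.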

On Linux machines, the available file systems are listed in a set of files with names matching the pattern /etc/auto.*. For example, /homes/gws is defined in /etc/auto.homes.gws and /projects is defined in /etc/auto.projects and friends. Those files are in a very simple human-readable format, so you can look inside to see what's provided where. For example, here's how to find out where /projects/compression2 lives:

    % egrep ^compression2 /etc/auto.projects
    compression2 portal:/m-compression2/&

The answer is "on portal.cs."
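To script this lookup, the hypothetical map_lookup function below finds an exact key in a map file and prints its location with & expanded, since the automounter replaces & in a location with the map key; for the entry above it would print portal:/m-compression2/compression2. It is a sketch that assumes simple one-line entries like the one shown: it skips comment lines but does not handle continuation lines, multi-mount entries, or included maps.

```sh
# Hypothetical helper: print the location for KEY in an automounter map,
# expanding "&" to the key. Usage: map_lookup KEY MAPFILE
map_lookup() {
    key=$1
    map=$2
    awk -v k="$key" '
        $1 == k {
            loc = $NF              # location is the last field, after any options
            gsub(/&/, k, loc)      # the automounter substitutes the key for "&"
            print loc
            found = 1
            exit
        }
        END { exit (found ? 0 : 1) }' "$map"
}
```

Unlike egrep ^compression2, which would also match a key such as compression20, the function compares the whole first field against the key.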