We address the problem of active object investigation using robotic manipulators and Kinect-style RGB-D sensors. To do so, we jointly tackle sensor-to-robot calibration, manipulator tracking, and 3D object model construction. We also consider motion and grasp planning to maximize coverage of the object.

Tracking and Modeling

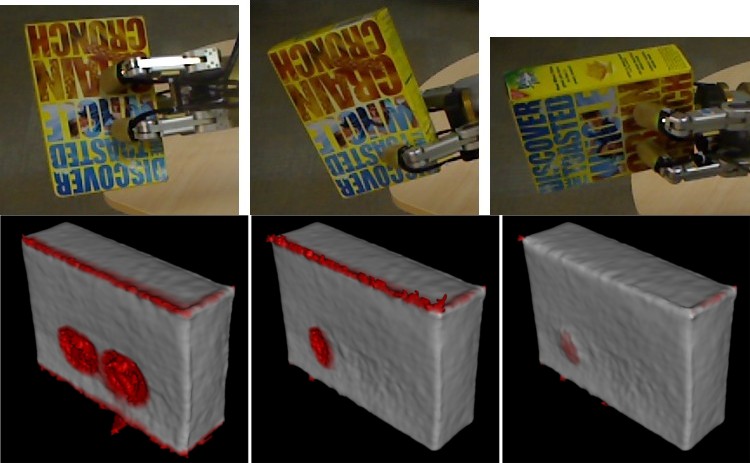

Due to the large end-effector errors experienced by our robot, we must perform articulated pose estimation in conjunction with object modeling. We combine a novel variant of the Articulated ICP algorithm with a Kalman filter-based framework for state estimation. The video below shows the tracking system on the left (model in red, sensor data in true color) and the surfel-based reconstruction on the right.
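As a minimal sketch of the filtering idea only, the example below runs a scalar Kalman filter for a single joint angle: the encoder reading drives the prediction, and an angle recovered by point cloud alignment acts as the measurement. This is not the Articulated ICP variant described above. The `JointEstimate` type, the function names, and all noise values are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class JointEstimate:
    angle: float     # estimated joint angle in radians
    variance: float  # uncertainty of that estimate


def predict(est: JointEstimate, encoder_delta: float, process_var: float) -> JointEstimate:
    """Propagate the estimate using the angle change reported by the encoder."""
    # Encoder error accumulates, so the variance grows at every prediction step.
    return JointEstimate(est.angle + encoder_delta, est.variance + process_var)


def update(est: JointEstimate, measured_angle: float, meas_var: float) -> JointEstimate:
    """Fuse an external angle measurement (hypothetically, from ICP alignment)."""
    gain = est.variance / (est.variance + meas_var)  # Kalman gain in [0, 1]
    angle = est.angle + gain * (measured_angle - est.angle)
    variance = (1.0 - gain) * est.variance
    return JointEstimate(angle, variance)


# Illustrative values only: one prediction step followed by one correction.
est = JointEstimate(angle=0.50, variance=0.04)
est = predict(est, encoder_delta=0.10, process_var=0.01)
est = update(est, measured_angle=0.66, meas_var=0.05)
print(est)
```

The gain weighs the measurement against the prediction according to their variances, so a noisy encoder is corrected more strongly when the sensor-based alignment is trusted. A full articulated tracker would estimate all joints jointly with a covariance matrix rather than one scalar per joint.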

Next Best View Planning

We developed a novel information-based variant of the next best view algorithm. We use it for trajectory and grasp planning to maximize coverage of the object during modeling. The video below demonstrates the next best view procedure for a single grasp.
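The general shape of a next best view selection can be sketched as follows: each candidate view is scored by how much uncertain surface it would observe, and the highest-scoring view is chosen. This is a simplified stand-in, not the information-based variant described above. The scoring rule (sum of `1 - confidence` over visible cells), the cell and view names, and the confidence values are all assumptions made for illustration.

```python
def view_score(visible_cells, confidence):
    """Total remaining uncertainty over the surface cells a view would observe."""
    return sum(1.0 - confidence[cell] for cell in visible_cells)


def next_best_view(candidates, confidence):
    """Return the name of the candidate view with the highest score."""
    return max(candidates, key=lambda name: view_score(candidates[name], confidence))


# Hypothetical per-cell confidence in [0, 1]; 0 means never observed.
confidence = {"top": 0.9, "front": 0.8, "back": 0.1, "bottom": 0.0, "left": 0.5}

# Hypothetical candidate views and the cells each one would see.
candidates = {
    "view_a": ["top", "front"],
    "view_b": ["back", "left"],
    "view_c": ["bottom", "front"],
}

best = next_best_view(candidates, confidence)
print(best)
```

In a real system the visible cells would come from ray casting against the current model with occlusion by the manipulator taken into account, and the score would typically be traded off against motion cost.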

Results

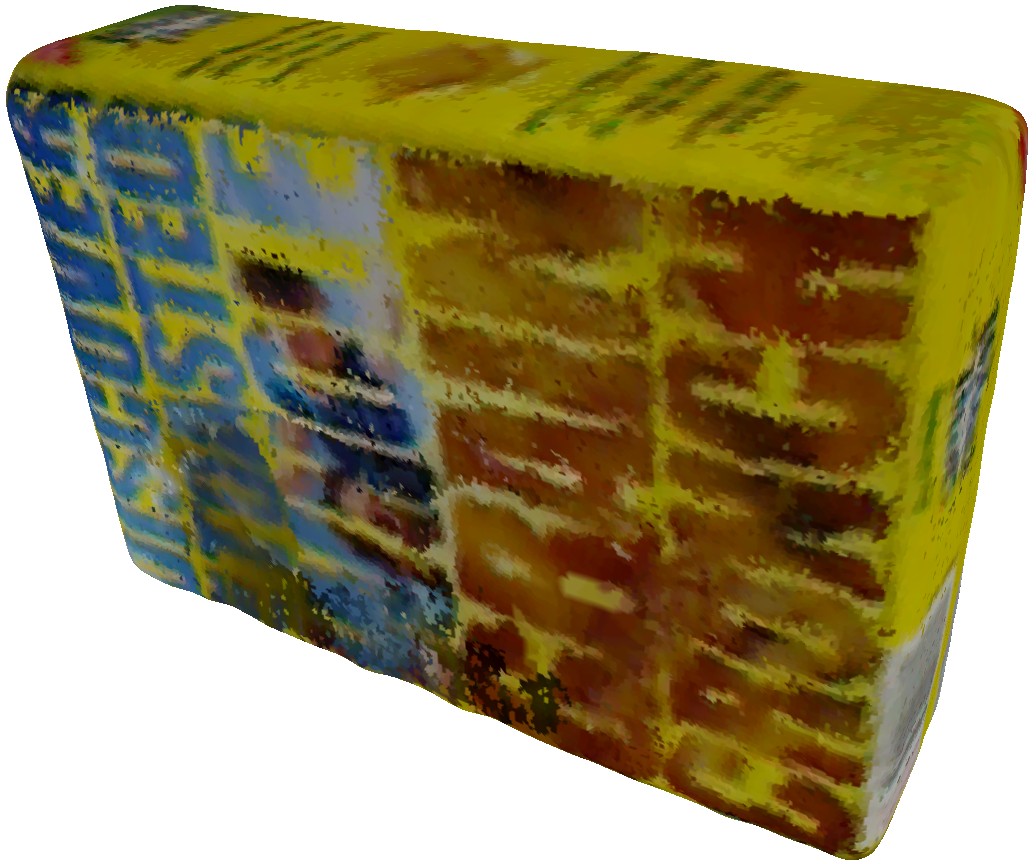

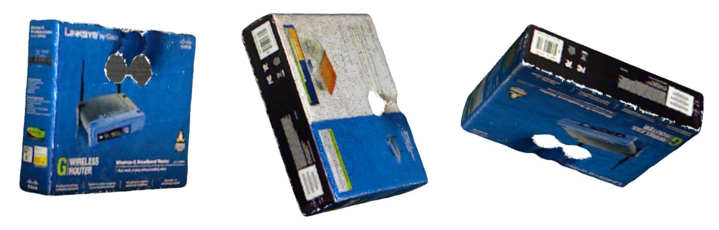

These first results use a single grasp only, without next best view motion planning. They have been converted to meshes using the Poisson Reconstruction algorithm. (Click for videos of the meshed models.)

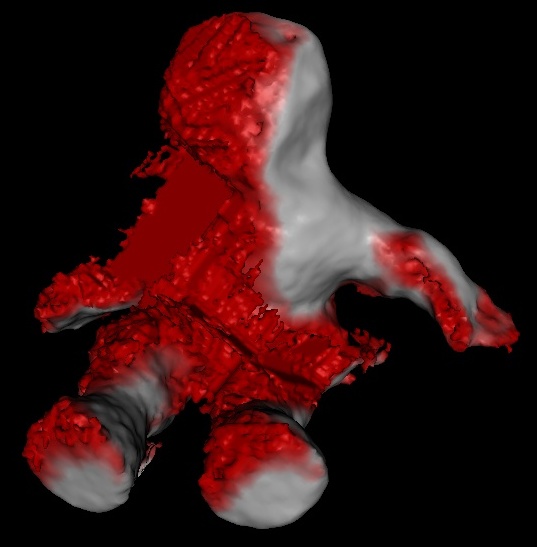

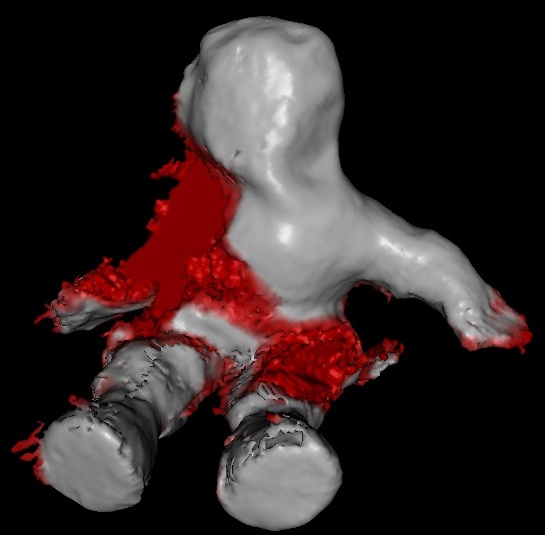

Next are examples of object models before and after next best view planning. Red areas indicate low-confidence regions with few or no observations. (Click for 3D PLY models.)
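One way such a confidence visualization could be produced is sketched below: each surface element gets a confidence derived from its observation count, and its display color is blended toward red as confidence drops. The saturation count of 10 and the linear blend are assumptions made for illustration, not the method used to render the models shown here.

```python
RED = (255, 0, 0)


def confidence_from_count(n_obs: int, saturation: int = 10) -> float:
    """Map an observation count to [0, 1]; `saturation` is an assumed cutoff."""
    return min(n_obs, saturation) / saturation


def shade(color, conf):
    """Blend an RGB color toward red as confidence falls to zero."""
    return tuple(round(conf * c + (1.0 - conf) * r) for c, r in zip(color, RED))


# A hypothetical surface color shown at three observation counts.
surface_color = (10, 200, 30)
for n_obs in (0, 5, 25):
    conf = confidence_from_count(n_obs)
    print(n_obs, conf, shade(surface_color, conf))
```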

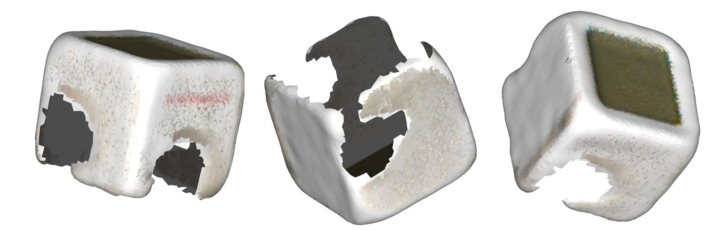

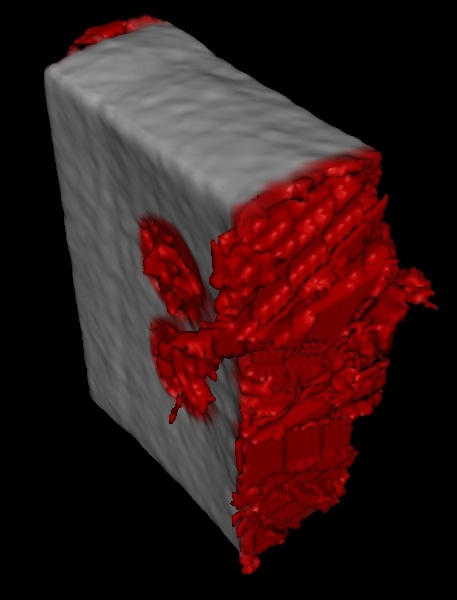

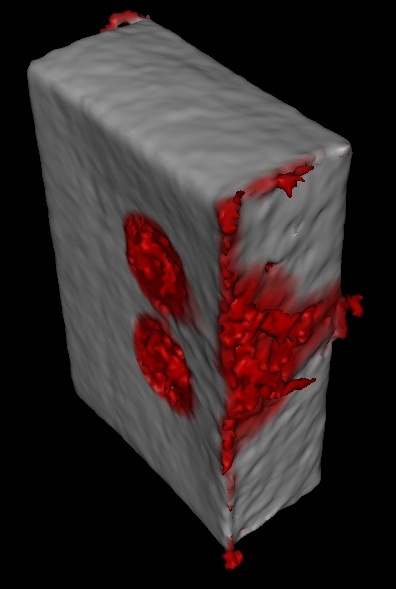

Shown below are three grasps of a box (with corresponding confidence models), as well as the resulting final model.